Making thematic maps has traditionally been the preserve of a ‘proper’ GIS, such as ArcGIS or QGIS. While these tools make it easy to work with shapefiles, and expose a range of common everyday GIS operations, they aren’t particularly well-suited to exploratory data analysis. In short, if you need to obtain, reshape, and otherwise wrangle data before you use it to make a map, it’s easier to use a data analysis tool (such as Pandas), and couple it to a plotting library. This tutorial will be demonstrating the use of:

- Pandas

- Matplotlib

- The matplotlib Basemap toolkit, for plotting 2D data on maps

- Fiona, a Python interface to OGR

- Shapely, for analyzing and manipulating planar geometric objects

- Descartes, which turns said geometric objects into matplotlib “patches”

- PySAL, a spatial analysis library

The approach I’m using here uses an interactive REPL (IPython Notebook) for data exploration and analysis, and the Descartespackage to render individual polygons (in this case, wards in London) as matplotlib patches, before adding them to a matplotlib axes instance. I should stress that many of the plotting operations could be more quickly accomplished, but my aim here is to demonstrate how to precisely control certain operations, in order to achieve e.g. the precise line width, colour, alpha value or label position you want.

Package installation

This tutorial uses Python 2.7.x, and the following non-stdlib packages are required:

- IPython

- Pandas

- Numpy

- Matplotlib

- Basemap

- Shapely

- Fiona

- Descartes

- PySAL

The installation of some of these packages can be onerous, and requires a great number of third-party dependencies (GDAL & OGR, C & FORTRAN77 (yes, really) compilers). If you’re experienced with Python package installation and building software from source, feel free to install these dependencies (if you’re using OSX, Homebrew and/or Kyngchaos are helpful, particularly for GDAL & OGR), before installing the required packages in a virtualenv, and skipping the rest of this section.

For everyone else: Enthought’s Canopy (which is free for academic users) provides almost everything you need, with the exception ofDescartes and PySAL. You can install them into the Canopy User Python quite easily, see this support article for details.

Running the Code

I find IPython Notebook best for this: code can be run in isolation within cells, making it easy to correct mistakes, and graphics are rendered inline, making it easy to fine-tune output. Opening a notebook is straightforward: run ipython notebook --pylab inlinefrom the command line. This will open a new notebook in your browser. Canopy users: the Canopy Editor will do this for you.

Obtaining a basemap

We’re going to be working with basemaps from Esri Shapefiles, and we’re going to plot some data about London on a map of London on a choropleth map. Here’s how to get the basemap, if you’re an academic user:

- http://edina.ac.uk/census/

- Log in with your university ID

- Go to “Quick Access to Boundary Data”

- Select “England, “Census boundaries”, “2001 to 2010″

- Select “English Census Area Statistic Wards, 2001 [within counties]“

- Click “List Areas”

- Select “Greater London”

- Click “Extract Boundary Data”

- Download and unzip the file somewhere, say a

data directory

Obtaining some data

We’re going to make three maps, using the same data: blue plaque locations within London. In order to do this, we’re going to extract the longitude, latitude, and some other features from the master XML file from Open Plaques. Get it here. This file contains data for every plaque Open Plaques knows about, but it’s incomplete in some cases, and will require cleanup before we can begin to extract a useful subset.

Extracting and cleaning the data

Let’s start by importing the packages we need. I’ll discuss the significance of certain libraries as needed.

from lxml import etree

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.cm as cm

from matplotlib.collections import PatchCollection

import matplotlib.font_manager as fm

from mpl_toolkits.basemap import Basemap

from shapely.geometry import Point, Polygon, MultiPoint, MultiPolygon, shape

from pysal.esda.mapclassify import Natural_Breaks as nb

from descartes import PolygonPatch

import mpl_toolkits.basemap.pyproj as pyproj

import fiona

from itertools import chain

Now, we’re going to extract all the useful XML data into a dict.

tree = etree.parse("data/plaques_20130119.xml")

root = tree.getroot()

output = dict()

output['raw'] = []

output['crs'] = []

output['lon'] = []

output['lat'] = []

for each in root.xpath('/openplaques/plaque/geo'):

# check what we got back

output['crs'].append(each.get('reference_system'))

output['lon'].append(each.get('longitude'))

output['lat'].append(each.get('latitude'))

# now go back up to plaque

r = each.getparent().xpath('inscription/raw')[0]

if isinstance(r.text, str):

output['raw'].append(r.text.lstrip().rstrip())

else:

output['raw'].append(None)

This will produce a dict containing the coordinate reference system, longitude, latitude, and description of each plaque record. Next, we’re going to create a Pandas DataFrame, drop all records which don’t contain a description, and convert the long and lat values from string to floating-point numbers.

df = pd.DataFrame(output)

df = df.replace({'raw': 0}, None)

df = df.dropna()

df[['lon', 'lat']] = df[['lon', 'lat']].astype(float)

Now, we’re going to open our shapefile, and get some data out of it, in order to set up our basemap. However, Basemap is fussy about the coordinate reference system (CRS) of the shapefile it uses, so we’ll have to convert ours to WGS84 before we can proceed. This is accomplished using the ogr2ogr tool, on the command line:

ogr2ogr -f "ESRI Shapefile" england_caswa_2001_n.shp england_caswa_2001.shp -s_srs EPSG:27700 -t_srs EPSG:4326

If you’re interested in what this does, see here for a little more detail. With the conversion complete, we can get some data from our shapefile:

shp = fiona.open('data/england_caswa_2001_n.shp')

bds = shp.bounds

shp.close()

extra = 0.01

wgs84 = pyproj.Proj("+init=EPSG:4326")

osgb36 = pyproj.Proj("+init=EPSG:27700")

ll = (bds[0], bds[1])

ur = (bds[2], bds[3])

coords = list(chain(ll, ur))

w, h = coords[2] - coords[0], coords[3] - coords[1]

We’ve done two things here:

- extracted the map boundaries

- Calculated the extent, width and height of our basemap

We’re ready to create a Basemap instance, which we can use to plot our maps on.

m = Basemap(

projection='tmerc',

lon_0 = -2.,

lat_0 = 49.,

ellps = 'WGS84',

llcrnrlon=coords[0] - extra * w,

llcrnrlat=coords[1] - extra + 0.01 * h,

urcrnrlon=coords[2] + extra * w,

urcrnrlat=coords[3] + extra + 0.01 * h,

lat_ts=0,

resolution='i',

suppress_ticks=True)

m.readshapefile(

'data/england_caswa_2001_n',

'london',

color='none',

zorder=2)

I’ve chosen the transverse mercator projection, because it exhibits less distortion over areas with a small east-west extent. This projection requires us to specify a central longitude and latitude, which I’ve set as -2, 49.

# set up a map dataframe

df_map = pd.DataFrame({

'poly': [Polygon(xy) for xy in m.london],

'ward_name': [w['name'] for w in m.london_info],

})

df_map['area_m'] = df_map['poly'].map(lambda x: x.area)

df_map['area_km'] = df_map['area_m'] / 100000

# Create Point objects in map coordinates from dataframe lon and lat values

map_points = pd.Series(

[Point(m(mapped_x, mapped_y)) for mapped_x, mapped_y in zip(df['lon'], df['lat'])])

plaque_points = MultiPoint(list(map_points.values))

wards_polygon = prep(MultiPolygon(list(df_map['poly'].values)))

# calculate points that fall within the London boundary

ldn_points = filter(wards_polygon.contains, plaque_points)

Our df_map dataframe now contains columns holding:

- a polygon for each ward in the shapefile

- its description

- its area in square metres

- its area in square kilometres

We’ve also created a series of Shapely Points, which we’ve constructed from our plaques dataframe, and a prepared geometry object from the combined ward polygons. We’ve done this in order to speed up our membership-checking operation significantly. The result is a Pandas series, ldn_points, containing all points which fall within the ward boundaries.

The two functions below make it easier to generate colour bars for our maps. Have a look at the docstrings for more detail – in essence, one of them discretises a colour ramp, and the other labels colour bars more easily.

# Convenience functions for working with colour ramps and bars

def colorbar_index(ncolors, cmap, labels=None, **kwargs):

"""

This is a convenience function to stop you making off-by-one errors

Takes a standard colourmap, and discretises it,

then draws a color bar with correctly aligned labels

"""

cmap = cmap_discretize(cmap, ncolors)

mappable = cm.ScalarMappable(cmap=cmap)

mappable.set_array([])

mappable.set_clim(-0.5, ncolors+0.5)

colorbar = plt.colorbar(mappable, **kwargs)

colorbar.set_ticks(np.linspace(0, ncolors, ncolors))

colorbar.set_ticklabels(range(ncolors))

if labels:

colorbar.set_ticklabels(labels)

return colorbar

def cmap_discretize(cmap, N):

"""

Return a discrete colormap from the continuous colormap cmap.

cmap: colormap instance, eg. cm.jet.

N: number of colors.

Example

x = resize(arange(100), (5,100))

djet = cmap_discretize(cm.jet, 5)

imshow(x, cmap=djet)

"""

if type(cmap) == str:

cmap = get_cmap(cmap)

colors_i = concatenate((linspace(0, 1., N), (0., 0., 0., 0.)))

colors_rgba = cmap(colors_i)

indices = linspace(0, 1., N + 1)

cdict = {}

for ki,key in enumerate(('red','green','blue')):

cdict[key] = [(indices[i], colors_rgba[i - 1, ki], colors_rgba[i, ki]) for i in xrange(N + 1)]

return matplotlib.colors.LinearSegmentedColormap(cmap.name + "_%d" % N, cdict, 1024)

Let’s make a scatter plot

# draw ward patches from polygons

df_map['patches'] = df_map['poly'].map(lambda x: PolygonPatch(

x, fc='#555555', ec='#787878', lw=.25, alpha=.9

, zorder=4))

plt.clf()

fig = plt.figure()

ax = fig.add_subplot(111, axisbg='w', frame_on=False)

# we don't need to pass points to m() because we calculated using map_points and shapefile polygons

dev = m.scatter(

[geom.x for geom in ldn_points],

[geom.y for geom in ldn_points],

5, marker='o', lw=.25,

facecolor='#33ccff', edgecolor='w',

alpha=0.9, antialiased=True,

label='Blue Plaque Locations', zorder=3)

# plot boroughs by adding the PatchCollection to the axes instance

ax.add_collection(PatchCollection(df_map['patches'].values, match_original=True))

# copyright and source data info

smallprint = ax.text(

1.03, 0,

'Total points: %s\nContains Ordnance Survey data\n$\copyright$ Crown copyright and database right 2013\nPlaque data from http://openplaques.org' % len(ldn_points),

ha='right', va='bottom',

size=4,

color='#555555',

transform=ax.transAxes,

)

# Draw a map scale

m.drawmapscale(

coords[0] + 0.08, coords[1] + 0.015,

coords[0], coords[1],

10.,

barstyle='fancy', labelstyle='simple',

fillcolor1='w', fillcolor2='#555555',

fontcolor='#555555',

zorder=5

)

plt.title("Blue Plaque Locations, London")

plt.tight_layout()

# this will set the image width to 722px at 100dpi

fig.set_size_inches(7.22, 5.25)

plt.savefig('data/london_plaques.png', dpi=100, alpha=True)

plt.show()

![Plaque Locations Scatter Plot]()

We’ve drawn a scatter plot on our map, containing points with a 50 metre diameter, corresponding to each point in our dataframe.

This is OK as a first step, but doesn’t really tell us anything interesting about the density per ward – merely that there are more plaques found in central London than in the outer wards.

Creating a Choropleth Map, Normalised by Ward Area

# create a MultiPoint which we can check for set membership

df_map['count'] = df_map['poly'].map(lambda x: int(len(filter(prep(x).contains, ldn_points))))

df_map['density_m'] = df_map['count'] / df_map['area_m']

df_map['density_km'] = df_map['count'] / df_map['area_km']

# it's easier to work with NaN values when classifying

df_map.replace(to_replace={'density_m': {0: np.nan}, 'density_km': {0: np.nan}}, inplace=True)

We’ve now created some additional columns, containing the number of points in each ward, and the density per square metre and square kilometre, for each ward. Normalising like this allows us to compare wards.

We’re almost ready to make a choropleth map, but first, we have to divide our wards into classes, in order to easily distinguish them. We’re going to accomplish this using an iterative method called Jenks Natural Breaks.

# Calculate Jenks natural breaks for density

breaks = nb(

df_map[df_map['density_km'].notnull()].density_km.values,

initial=300,

k=5)

# the notnull method lets us match indices when joining

jb = pd.DataFrame({'jenks_bins': breaks.yb}, index=df_map[df_map['density_km'].notnull()].index)

df_map = df_map.join(jb)

df_map.jenks_bins.fillna(-1, inplace=True)

We’ve calculated the classes (five, in this case) for all the wards containing one or more plaques (density_km is not Null), and created a new dataframe containing the class number (0 – 4), with the same index as the non-null density values. This makes it easy to join it to the existing dataframe. The final step involves assigning the bin class -1 to all non-valued rows (wards), in order to create a separate zero-density class.

We also want to create a sensible label for our classes:

jenks_labels = ["<= %0.1f/km$^2$(%s wards)" % (b, w) for b, w in zip(

breaks.bins, breaks.counts)]

jenks_labels.insert(0, 'No plaques (%s wards)' % len(df_map[df_map['density_km'].isnull()]))

This will show density/square km, as well as the number of wards in the class.

We’re now ready to plot our choropleth map:

plt.clf()

fig = plt.figure()

ax = fig.add_subplot(111, axisbg='w', frame_on=False)

# use a blue colour ramp - we'll be converting it to a map using cmap()

cmap = plt.get_cmap('Blues')

# draw wards with grey outlines

df_map['patches'] = df_map['poly'].map(lambda x: PolygonPatch(x, ec='#555555', lw=.2, alpha=1., zorder=4))

pc = PatchCollection(df_map['patches'], match_original=True)

# impose our colour map onto the patch collection

norm = Normalize()

pc.set_facecolor(cmap(norm(df_map['jenks_bins'].values)))

ax.add_collection(pc)

# Add a colour bar

cb = colorbar_index(ncolors=len(jenks_labels), cmap=cmap, shrink=0.5, labels=jenks_labels)

cb.ax.tick_params(labelsize=6)

# Show highest densities, in descending order

highest = '\n'.join(value[1] for

_, value in df_map[(df_map['jenks_bins'] == 4)][:10].sort().iterrows())

highest = 'Most Dense Wards:\n\n' + highest

# Subtraction is necessary for precise y coordinate alignment

details = cb.ax.text(

-1., 0 - 0.007,

highest,

ha='right', va='bottom',

size=5,

color='#555555',

)

# Bin method, copyright and source data info

smallprint = ax.text(

1.03, 0,

'Classification method: natural breaks\nContains Ordnance Survey data\n$\copyright$ Crown copyright and database right 2013\nPlaque data from http://openplaques.org',

ha='right', va='bottom',

size=4,

color='#555555',

transform=ax.transAxes,

)

# Draw a map scale

m.drawmapscale(

coords[0] + 0.08, coords[1] + 0.015,

coords[0], coords[1],

10.,

barstyle='fancy', labelstyle='simple',

fillcolor1='w', fillcolor2='#555555',

fontcolor='#555555',

zorder=5

)

# this will set the image width to 722px at 100dpi

plt.tight_layout()

fig.set_size_inches(7.22, 5.25)

plt.savefig('data/london_plaques.png', dpi=100, alpha=True)

plt.show()

![Density Per Ward Choropleth]()

Finally, we can create an alternative map using hex bins. These are a more informative alternative to point maps, as we shall see. The Basemap package provides a hex-binning method, and we require a few pieces of extra information in order to use it:

- the longitude and latitude coordinates of the points which will be used must be provided as numpy arrays.

- We have to specify a grid size, in metres. You can experiment with this setting; I’ve chosen 125.

- setting the

mincnt value to 1 means that no bins will be drawn in areas where there are no plaques within the grid.

- You can specify the bin type. In this case, I’ve chosen

log, which uses a logarithmic scale for the colour map. This more clearly emphasises minor differences in the densities of each bin.

The code:

# draw ward patches from polygons

df_map['patches'] = df_map['poly'].map(lambda x: PolygonPatch(

x, fc='#555555', ec='#787878', lw=.25, alpha=.9, zorder=0))

plt.clf()

fig = plt.figure()

ax = fig.add_subplot(111, axisbg='w', frame_on=False)

# plot boroughs by adding the PatchCollection to the axes instance

ax.add_collection(PatchCollection(df_map['patches'].values, match_original=True))

df_london = df[

(df['lon'] >= ll[0]) &

(df['lon'] <= ur[0]) &

(df['lat'] >= ll[1]) &

(df['lat'] <= ur[1])

]

lon_ldn = df_london.lon.values

lat_ldn = df_london.lat.values

# the mincnt argument only shows cells with a value >= 1

# hexbin wants np arrays, not plain lists

hx = m.hexbin(

np.array([geom.x for geom in ldn_points]),

np.array([geom.y for geom in ldn_points]),

gridsize=125,

bins='log',

mincnt=1,

edgecolor='none',

alpha=1.,

lw=0.2,

cmap=plt.get_cmap('Blues'))

# copyright and source data info

smallprint = ax.text(

1.03, 0,

'Total points: %s\nContains Ordnance Survey data\n$\copyright$ Crown copyright and database right 2013\nPlaque data from http://openplaques.org' % len(ldn_points),

ha='right', va='bottom',

size=4,

color='#555555',

transform=ax.transAxes,

)

# Draw a map scale

m.drawmapscale(

coords[0] + 0.08, coords[1] + 0.015,

coords[0], coords[1],

10.,

barstyle='fancy', labelstyle='simple',

fillcolor1='w', fillcolor2='#555555',

fontcolor='#555555',

zorder=5

)

plt.title("Blue Plaque Density, London")

plt.tight_layout()

# this will set the image width to 722px at 100dpi

fig.set_size_inches(7.22, 5.25)

plt.savefig('data/london_plaques.png', dpi=100, alpha=True)

plt.show()

![Hex-binned Plaque Density Hexbin]()

In a future post, I’ll be discussing Geopandas…

I believe that the geospatial industry has lagged behind the general IT landscape, with concepts like “Big Data” and “Cloud Computing” taking longer to gain a solid foothold. Buzzwords aside, technology has changed a lot but much of the traditional GIS industry has not been aware of these changes. This will be a pivotal year, fueled in part by open source communities that think outside the box, solve real problems, and don’t need to work at the speed of a large proprietary monopoly.

I believe that the geospatial industry has lagged behind the general IT landscape, with concepts like “Big Data” and “Cloud Computing” taking longer to gain a solid foothold. Buzzwords aside, technology has changed a lot but much of the traditional GIS industry has not been aware of these changes. This will be a pivotal year, fueled in part by open source communities that think outside the box, solve real problems, and don’t need to work at the speed of a large proprietary monopoly.

Ten years ago, when PostGIS was at 0.8 and the world was fresh and new, I was pretty convinced our industry was on the cusp of an open source revolution. When folks got a taste of the new, flexible, free tools for building systems they’d naturally discard their legacy proprietary software and swiftly move on to a more enlightened existence. I felt excitement, and wind in my hair.

Ten years ago, when PostGIS was at 0.8 and the world was fresh and new, I was pretty convinced our industry was on the cusp of an open source revolution. When folks got a taste of the new, flexible, free tools for building systems they’d naturally discard their legacy proprietary software and swiftly move on to a more enlightened existence. I felt excitement, and wind in my hair.

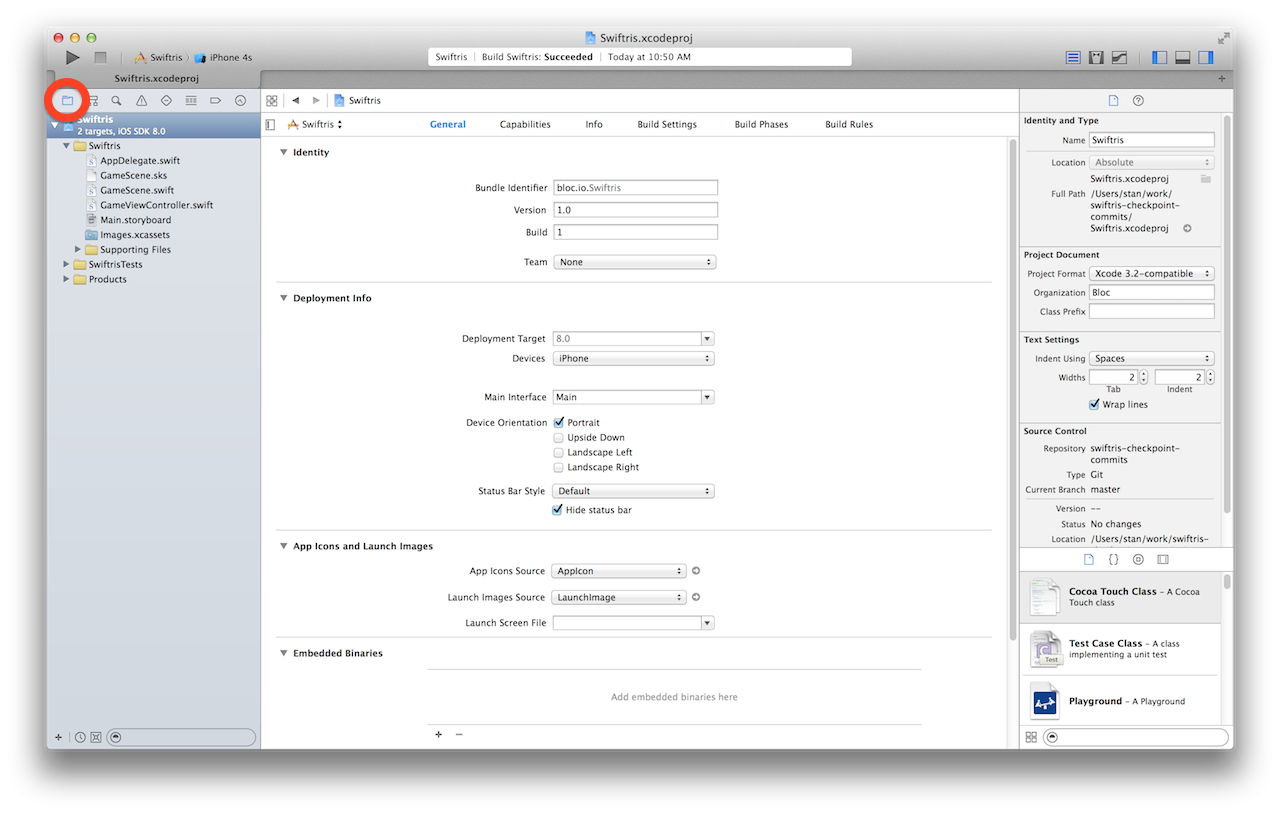

Before we start playing with blocks, you should know the tools we’ll be using: Swift, SpriteKit and Xcode.

Before we start playing with blocks, you should know the tools we’ll be using: Swift, SpriteKit and Xcode. Or

Or When the new project window appears, choose Game from under the iOS > Application category and press Next.

When the new project window appears, choose Game from under the iOS > Application category and press Next. The next window beckons you to customize options for your project. Fill out the fields described in the table below:

The next window beckons you to customize options for your project. Fill out the fields described in the table below:

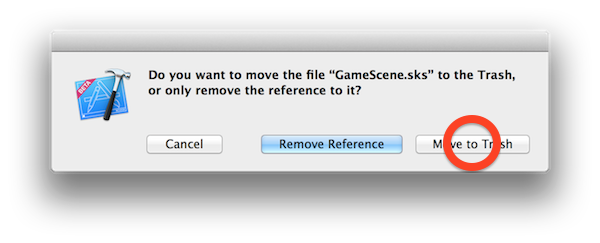

Right-click

Right-click  When asked to confirm, make sure to choose Move to trash:

When asked to confirm, make sure to choose Move to trash: To get rid of the aimless space ship once and for all, click the

To get rid of the aimless space ship once and for all, click the

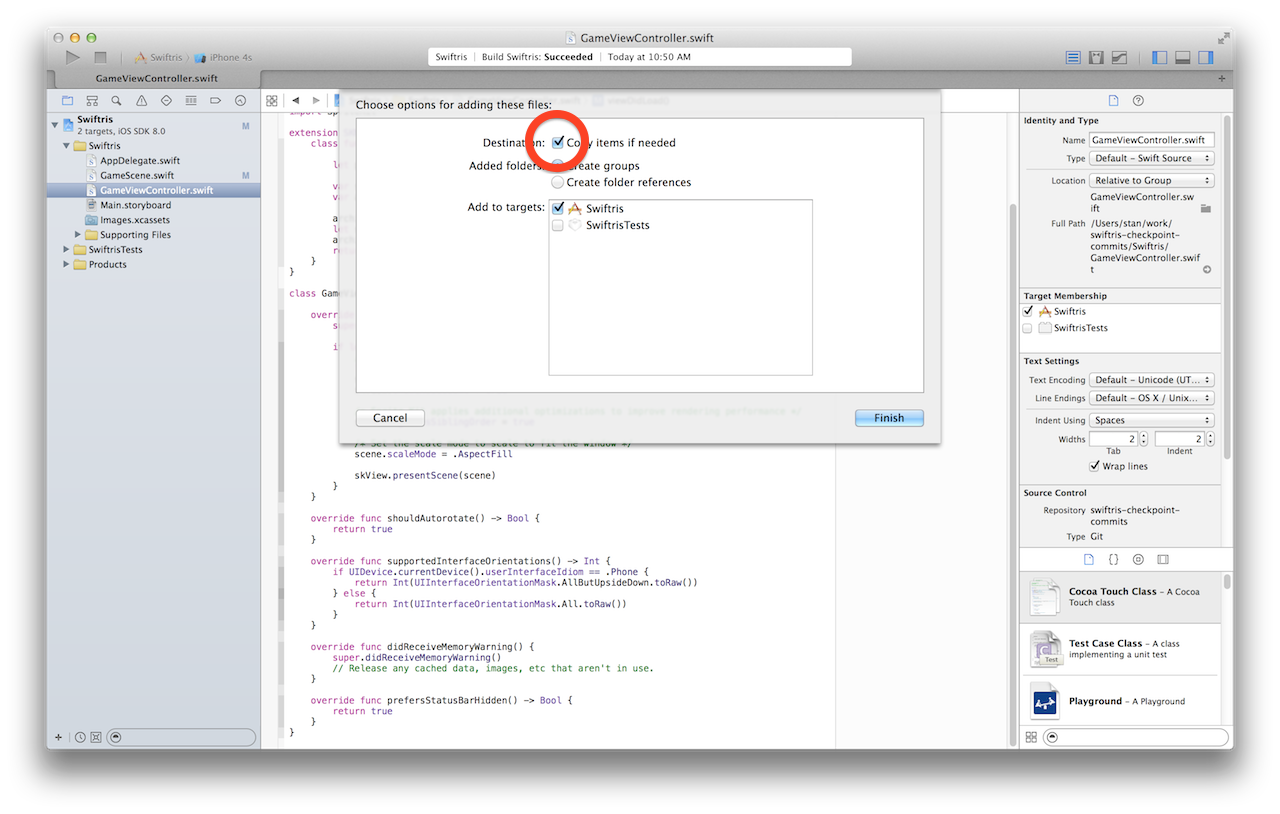

Make sure to check the Copy items if necessary option. This will place a copy of the directory and all of the sound files within it into your Swiftris project and directory. Click Finish. Repeat this task with the

Make sure to check the Copy items if necessary option. This will place a copy of the directory and all of the sound files within it into your Swiftris project and directory. Click Finish. Repeat this task with the PostGIS 2.0 will enable a new class of Heroku applications that leverage location data. Whether you are looking to

PostGIS 2.0 will enable a new class of Heroku applications that leverage location data. Whether you are looking to