Deep learning for complete beginners: Recognising handwritten digits

by Cambridge Coding Academy

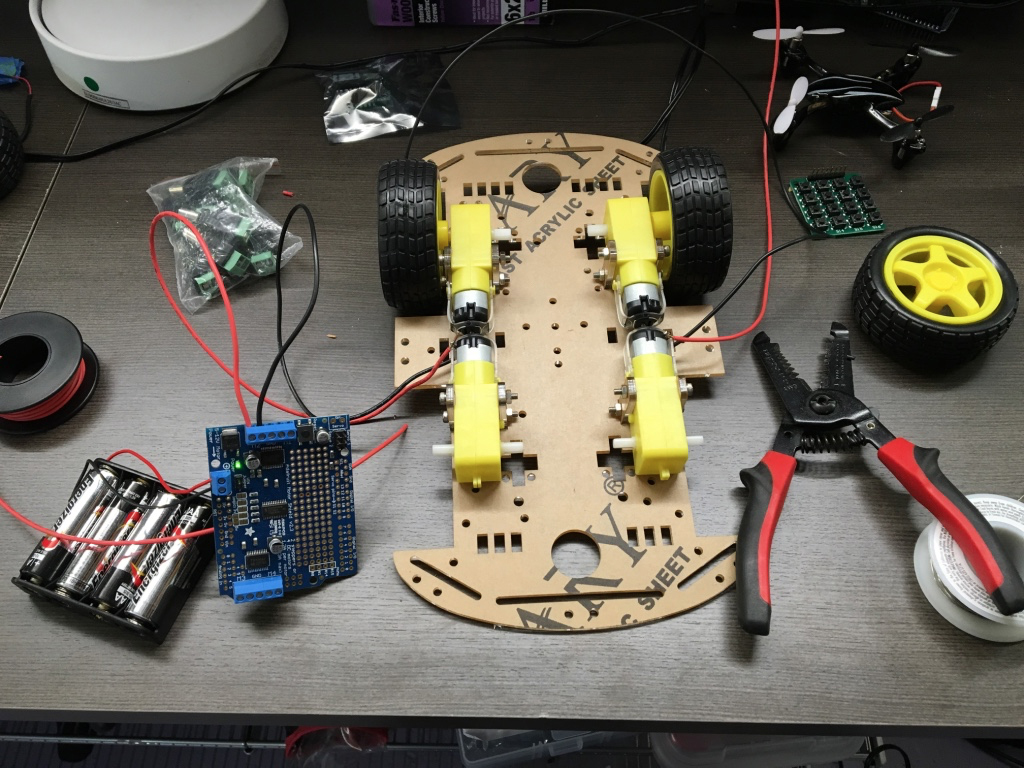

Welcome to the first in a series of blog posts that is designed to get you quickly up to speed with deep learning, from first principles all the way to discussions of some of the intricate details, with the purpose of achieving respectable performance on two established machine learning benchmarks: MNIST (classification of handwritten digits) and CIFAR-10 (classification of small images across 10 distinct classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship & truck).

| MNIST | CIFAR-10 |
|---|---|
| ![]() | ![]() |

The accelerated growth of deep learning has led to the development of several very convenient frameworks, which allow us to rapidly construct and prototype our models, as well as offering hassle-free access to established benchmarks such as the two mentioned above. The particular environment we will be using is Keras, which I’ve found to be the most convenient and intuitive for everyday use, but still expressive enough to allow detailed model tinkering when it is necessary.

By the end of this part of the tutorial, you should be able to understand and produce a simple multilayer perceptron (MLP) deep learning model in Keras that achieves a respectable level of accuracy on MNIST. The next tutorial in the series will explore techniques for handling larger image classification tasks (such as CIFAR-10).

(Artificial) neurons

While the term “deep learning” allows for a broader interpretation, in practice, in the vast majority of cases, it is applied to (artificial) neural networks. These biologically inspired structures attempt to mimic the way in which neurons in the brain process percepts from the environment and drive decision-making. In fact, a single artificial neuron (sometimes also called a perceptron) has a very simple mode of operation: it computes a weighted sum of all of its inputs $\vec{x}$, using a weight vector $\vec{w}$ (along with an additive bias term, $w_0$), and then potentially applies an activation function, $\sigma$, to the result.
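As a concrete illustration, the computation performed by a single neuron fits in a few lines of NumPy. This is a minimal sketch: the function name `neuron_output` and the example input, weight and bias values are hypothetical, chosen only to show the arithmetic.

```python
import numpy as np

def neuron_output(x, w, w0, activation=lambda z: z):
    """Single artificial neuron: activation(w0 + sum_i w_i * x_i)."""
    z = w0 + np.dot(w, x)  # weighted sum of the inputs plus the bias term
    return activation(z)

# Hypothetical example values
x = np.array([1.0, 2.0, 3.0])    # inputs
w = np.array([0.5, -1.0, 0.25])  # weights
w0 = 0.1                         # bias

print(neuron_output(x, w, w0))   # identity activation: 0.1 + 0.5 - 2.0 + 0.75
```

Passing a different `activation` (for example a ReLU) turns the same weighted sum into a nonlinear neuron.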

![]()

Some of the popular choices for activation functions include (plots given below):

– identity: $\sigma(z) = z$;

– sigmoid: especially the logistic function, $\sigma(z) = \frac{1}{1 + \exp(-z)}$, and the hyperbolic tangent, $\sigma(z) = \tanh z$;

– rectified linear (ReLU): $\sigma(z) = \max(0, z)$.

![]()
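The activation functions listed above translate directly into NumPy. The sketch below (function names are our own) evaluates them on a few points and also checks one way to see how similar the two sigmoids are: the hyperbolic tangent is a rescaled and shifted logistic function, $\tanh z = 2\,\mathrm{logistic}(2z) - 1$.

```python
import numpy as np

def identity(z):
    return z

def logistic(z):
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    return np.tanh(z)

def relu(z):
    return np.maximum(0.0, z)  # "if it's negative, set it to zero"

z = np.linspace(-5.0, 5.0, 11)
for name, f in [("identity", identity), ("logistic", logistic), ("tanh", tanh), ("relu", relu)]:
    print(f"{name:>8}: {np.round(f(z), 3)}")

# The two sigmoids differ only by scaling and shifting
print(np.allclose(tanh(z), 2.0 * logistic(2.0 * z) - 1.0))  # True
```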

Original perceptron models (from the 1950s) were fully linear, i.e. they only employed the identity activation. It soon became evident that tasks of interest are often nonlinear in nature, which led to the use of other activation functions. Sigmoid functions (owing their name to their characteristic “S”-shaped plot) provide a nice way to encode a neuron’s initial “uncertainty” in a binary decision when $z$ is close to zero, coupled with quick saturation as $z$ shifts in either direction. The two functions presented here are very similar, with the hyperbolic tangent giving outputs within $[-1, 1]$, and the logistic function giving outputs within $[0, 1]$ (and therefore being useful for representing probabilities).

In recent years, ReLU activations (and variations thereof) have become ubiquitous in deep learning—they started out as a simple, “engineer’s” way to inject nonlinearity into the model (“if it’s negative, set it to zero”), but turned out to be far more successful than the historically more popular sigmoid activations, and also have been linked to the way physical neurons transmit electrical potential. As such, we will focus exclusively on them in this tutorial.

A neuron is completely specified by its weight vector $\vec{w}$, and the key aim of a learning algorithm is to assign appropriate weights to the neuron based on a training set of known input/output pairs, such that a notion of “predictive error/loss” is minimised when the inputs within the training set are applied to the neuron. One typical example of such a learning algorithm is gradient descent, which, for a particular loss function $E(\vec{w})$, updates the weight vector in the direction of steepest descent of the loss function, scaled by a learning rate parameter $\eta$:

$$\vec{w} \leftarrow \vec{w} - \eta \frac{\partial E(\vec{w})}{\partial \vec{w}}$$

The loss function represents our belief of how “incorrect” the neuron is at making predictions under its current parameter values. The simplest such choice of loss function (and a reasonable default in many settings) is the squared error loss; for a particular training example $(\vec{x}, y)$ it is defined as the squared difference between the ground-truth label $y$ and the output of the neuron when given $\vec{x}$ as input:

$$E(\vec{w}) = \left(y - \sigma\left(w_0 + \sum_{i=1}^{n} w_i x_i\right)\right)^2$$
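To make the update rule concrete, here is a minimal sketch of gradient descent on the squared error of a single logistic neuron, for one training example. The gradient is derived by hand with the chain rule, using $\sigma'(a) = \sigma(a)(1 - \sigma(a))$ for the logistic function; the example data, the learning rate of 0.5 and the helper names are assumptions for illustration, not values from this tutorial.

```python
import numpy as np

def logistic(z):
    return 1.0 / (1.0 + np.exp(-z))

def squared_error(w0, w, x, y):
    """E(w) = (y - sigma(w0 + sum_i w_i x_i))^2 for a single training example (x, y)."""
    return (y - logistic(w0 + np.dot(w, x))) ** 2

def gradient_step(w0, w, x, y, eta):
    """One gradient descent update w <- w - eta * dE/dw (bias w0 included)."""
    out = logistic(w0 + np.dot(w, x))
    # Chain rule: dE/da = -2 (y - out) * sigma'(a), where sigma'(a) = out * (1 - out)
    delta = -2.0 * (y - out) * out * (1.0 - out)
    # dE/dw0 = delta and dE/dw_i = delta * x_i
    return w0 - eta * delta, w - eta * delta * x

x, y = np.array([1.0, -2.0]), 1.0   # a single (hypothetical) training example
w0, w = 0.0, np.zeros(2)            # start from all-zero weights
losses = []
for _ in range(100):
    losses.append(squared_error(w0, w, x, y))
    w0, w = gradient_step(w0, w, x, y, eta=0.5)
print(losses[0], losses[-1])        # the loss shrinks from its initial value of 0.25
```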

There are many excellent tutorials online that provide a more in-depth overview of gradient descent algorithms—one of which may be found on this website! Here the framework will take care of the optimisation routines for us, and therefore I will not dedicate further attention to them.

Enter neural networks (& deep learning)

Once we have a notion of a neuron, it is possible to connect outputs of neurons to inputs of other neurons, giving rise to neural networks. In general we will focus on feedforward neural networks, where these neurons are typically organised in layers, such that the neurons within a single layer process the outputs of the previous layer. The most potent of such architectures (a multilayer perceptron or MLP) fully connects all outputs of a layer to all the neurons in the following layer, as illustrated below.

![]()
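A forward pass through such a fully connected network is just the single-neuron computation applied to a whole layer at once, with the weights of each layer stored as a matrix. This is a minimal NumPy sketch with hypothetical layer sizes and randomly drawn weights; training is not shown.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def mlp_forward(x, layers):
    """Feed input x through a list of fully connected (W, b, activation) layers."""
    h = x
    for W, b, activation in layers:
        # Row j of W holds the weights of neuron j, which sees every output of the previous layer
        h = activation(W @ h + b)
    return h

rng = np.random.default_rng(0)
sizes = [4, 5, 5, 3]  # hypothetical: 4 inputs, two hidden layers of 5 neurons, 3 outputs
layers = []
for i, (n_in, n_out) in enumerate(zip(sizes[:-1], sizes[1:])):
    is_last = i == len(sizes) - 2
    activation = (lambda z: z) if is_last else relu  # linear output layer, ReLU elsewhere
    layers.append((rng.normal(size=(n_out, n_in)), np.zeros(n_out), activation))

x = rng.normal(size=sizes[0])
print(mlp_forward(x, layers).shape)  # (3,)
```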

The output neurons’ weights can be updated by direct application of the previously mentioned gradient descent on a given loss function; for the other neurons, these losses need to be propagated backwards (by applying the chain rule for partial differentiation), giving rise to the backpropagation algorithm. As with the basic gradient descent algorithm, I will not focus on the mathematical derivation of the algorithm here, as the framework will take care of it for us.
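Although the framework handles backpropagation for us, a small example shows what the chain rule computes. The sketch below, an illustration rather than how Keras implements it, backpropagates the squared error through a network with one ReLU hidden layer and a linear output layer (the sizes and the helper names `loss_and_grads` and `numeric_grad` are hypothetical), then compares a gradient against central finite differences.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def loss_and_grads(x, y, W1, b1, W2, b2):
    """Squared error of a one-hidden-layer ReLU network, and its gradients via backpropagation."""
    # Forward pass, keeping the intermediate values needed later
    a1 = W1 @ x + b1
    h1 = relu(a1)
    out = W2 @ h1 + b2                  # linear output layer
    loss = np.sum((y - out) ** 2)
    # Backward pass: apply the chain rule from the output back towards the input
    d_out = -2.0 * (y - out)            # dL/d(out)
    dW2, db2 = np.outer(d_out, h1), d_out
    d_h1 = W2.T @ d_out                 # error propagated back to the hidden layer
    d_a1 = d_h1 * (a1 > 0)              # ReLU derivative: 1 where a1 > 0, else 0
    dW1, db1 = np.outer(d_a1, x), d_a1
    return loss, (dW1, db1, dW2, db2)

def numeric_grad(f, P, eps=1e-6):
    """Central finite differences of the scalar f() w.r.t. each entry of array P (P is restored)."""
    G = np.zeros_like(P)
    for idx in np.ndindex(P.shape):
        old = P[idx]
        P[idx] = old + eps
        f_plus = f()
        P[idx] = old - eps
        f_minus = f()
        P[idx] = old
        G[idx] = (f_plus - f_minus) / (2 * eps)
    return G

rng = np.random.default_rng(1)
x, y = rng.normal(size=3), rng.normal(size=2)
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=2)

loss, (dW1, db1, dW2, db2) = loss_and_grads(x, y, W1, b1, W2, b2)
f = lambda: loss_and_grads(x, y, W1, b1, W2, b2)[0]
print(np.max(np.abs(numeric_grad(f, W1) - dW1)))  # should be tiny (rounding error only)
```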

By Cybenko’s universal approximation theorem, a (wide enough) MLP with a single hidden layer of sigmoid neurons is capable of approximating any continuous real function on a closed, bounded domain to arbitrary accuracy; however, the proof of this theorem is not constructive, and therefore does not offer an efficient training algorithm for learning such structures in general. Deep learning represents a response to this: rather than increasing the width, increase the depth; by definition, any neural network with more than one hidden layer is considered deep.

The shift in depth also often allows us to feed raw input data directly into the network; in the past, single-layer neural networks were run on features extracted from the input by carefully crafted feature functions. This meant that significantly different approaches were needed for, e.g., computer vision, speech recognition and natural language processing, impeding scientific collaboration across these fields. However, when a network has multiple hidden layers, it gains the capability to learn by itself the feature functions that best describe the raw data, making it applicable to end-to-end learning, allowing the same kind of network to be used across a wide variety of tasks, and removing the need to design feature functions as part of the pipeline. I will show graphical evidence of this in the second part of this tutorial, when we explore convolutional neural networks (CNNs).

As this post’s objective, we will implement the simplest possible deep neural network, an MLP with two hidden layers, and apply it to the MNIST handwritten digit recognition task.

Only the following imports are required:

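```python
from keras.datasets import mnist  # subroutines for fetching the MNIST dataset
from keras.models import Model  # basic class for specifying and training a neural network
from keras.layers import Input, Dense  # the two types of neural network layer we will be using
from keras.utils import to_categorical  # utility for one-hot encoding of ground truth values
```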

Next up, we’ll define some parameters of our model. These are often called hyperparameters, because they are assumed to be fixed before training starts. For the purposes of this tutorial, we will stick to some sensible values, but keep in mind that choosing them well is a significant problem in its own right, which will be addressed more properly in a future tutorial.

In particular, we will define:

– The batch size, representing the number of training examples being used simultaneously during a single iteration of the gradient descent algorithm;

– The number of epochs, representing the number of times the training algorithm will iterate over the entire training set before terminating;

– The number of neurons in each of the two hidden layers of the MLP.

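```python
batch_size = 128  # in each iteration, we consider 128 training examples at once
num_epochs = 20  # we iterate twenty times over the entire training set
hidden_size = 512  # there will be 512 neurons in both hidden layers
```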

Now it is time to load and preprocess the MNIST data set. Keras makes this extremely simple, with a fixed interface for fetching and extracting the data from the remote server, directly into NumPy arrays.

To preprocess the input data, we will first flatten the images into 1D (as we will consider each pixel as a separate input feature), and we will then force the pixel intensity values to be in the $[0, 1]$ range by dividing them by 255. This is a very simple way to “normalise” the data, and I will be discussing other ways in future tutorials in this series.

A good approach to a classification problem is to use probabilistic classification, i.e. to have a single output neuron for each class, outputting a value which corresponds to the probability of the input being of that particular class. This implies a need to transform the training output data into a “one-hot” encoding: for example, if the desired output class is 3, and there are five classes overall (labelled 0 to 4), then an appropriate one-hot encoding is $[0\ 0\ 0\ 1\ 0]$. Keras, once again, provides out-of-the-box functionality for doing just that.
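Under the hood, one-hot encoding amounts to placing a single 1 in each row. The following NumPy sketch (the `one_hot` helper is our own, not part of Keras) should produce the same matrices as Keras’s `to_categorical` for integer labels, and reproduces the five-class example from the text.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Turn integer class labels into one-hot rows: row n has a 1 in column labels[n], 0 elsewhere."""
    labels = np.asarray(labels, dtype=int).ravel()
    encoded = np.zeros((labels.shape[0], num_classes), dtype='float32')
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded

print(one_hot([3], 5))  # [[0. 0. 0. 1. 0.]]
print(one_hot([0, 4, 2], 5))
```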

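```python
num_train = 60000  # there are 60000 training examples in MNIST
num_test = 10000  # there are 10000 test examples in MNIST

height, width, depth = 28, 28, 1  # MNIST images are 28x28 and greyscale
num_classes = 10  # there are 10 classes (1 per digit)

(X_train, y_train), (X_test, y_test) = mnist.load_data()  # fetch MNIST data

X_train = X_train.reshape(num_train, height * width)  # flatten data to 1D
X_test = X_test.reshape(num_test, height * width)  # flatten data to 1D
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
X_train /= 255  # normalise data to [0, 1] range
X_test /= 255  # normalise data to [0, 1] range

Y_train = to_categorical(y_train, num_classes)  # one-hot encode the labels
Y_test = to_categorical(y_test, num_classes)  # one-hot encode the labels
```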

Now is the time to actually define our model! To do this we will be using a stack of three Dense layers, which correspond to a fully unrestricted MLP structure, linking all of the outputs of the previous layer to the inputs of the next one. We will use ReLU activations for the neurons in the first two layers, and a softmax activation for the neurons in the final one. This activation is designed to turn any real-valued vector into a vector of probabilities, and is defined as follows, for the $j$-th neuron:

$$\sigma(\vec{z})_j = \frac{\exp(z_j)}{\sum_i \exp(z_i)}$$
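The softmax formula can be sketched in NumPy as follows. One practical detail not covered above is that implementations usually subtract $\max_i z_i$ before exponentiating. This leaves the result unchanged, because the common factor cancels between numerator and denominator, but it avoids overflow for large inputs. The function name and inputs are illustrative.

```python
import numpy as np

def softmax(z):
    """sigma(z)_j = exp(z_j) / sum_i exp(z_i), computed stably by first subtracting max(z)."""
    shifted = z - np.max(z)  # exp(z_j - c) / sum_i exp(z_i - c) equals the original ratio
    e = np.exp(shifted)
    return e / np.sum(e)

p = softmax(np.array([2.0, 1.0, 0.1]))
print(p, p.sum())  # nonnegative entries that sum to 1 (up to rounding)

# A naive exp(1000.0) would overflow to inf; the shifted version is fine
print(softmax(np.array([1000.0, 1000.0])))  # [0.5 0.5]
```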

An excellent feature of Keras, which (at the time of writing) set it apart from lower-level frameworks such as TensorFlow, is automatic inference of shapes: we only need to specify the shape of the input layer, and afterwards Keras will take care of initialising the weight variables with proper shapes. Once all the layers have been defined, we simply need to identify the input(s) and the output(s) in order to define our model, as illustrated below.

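```python
inp = Input(shape=(height * width,))  # our input is a 1D vector of size 784
hidden_1 = Dense(hidden_size, activation='relu')(inp)  # first hidden ReLU layer
hidden_2 = Dense(hidden_size, activation='relu')(hidden_1)  # second hidden ReLU layer
out = Dense(num_classes, activation='softmax')(hidden_2)  # output softmax layer

model = Model(inputs=inp, outputs=out)  # to define a model, just specify its input and output layers
```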
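As a quick check on the shapes Keras infers, the number of trainable parameters in this model can be counted by hand: each `Dense` layer has one weight per (input, neuron) pair plus one bias per neuron. The sketch below does the arithmetic in plain Python; Keras’s `model.summary()` should report the same total.

```python
height, width = 28, 28
hidden_size, num_classes = 512, 10

def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus biases (n_out) of a fully connected layer."""
    return n_in * n_out + n_out

layer_params = [
    dense_params(height * width, hidden_size),  # input -> hidden_1
    dense_params(hidden_size, hidden_size),     # hidden_1 -> hidden_2
    dense_params(hidden_size, num_classes),     # hidden_2 -> out
]
print(layer_params, sum(layer_params))  # [401920, 262656, 5130] 669706
```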

To finish off specifying the model, we need to define our loss function, the optimisation algorithm to use, and which metrics to report.

When dealing with probabilistic classification, it is actually better to use the cross-entropy loss, rather than the previously defined squared error. For a particular output probability vector $\vec{y}$, compared with our (ground-truth) one-hot vector $\hat{\vec{y}}$, the loss (for $k$-class classification) is defined by

$$\mathcal{L}(\vec{y}, \hat{\vec{y}}) = -\sum_{i=1}^{k} \hat{y}_i \ln y_i$$

This loss is better suited to probabilistic tasks (i.e. ones with logistic/softmax output neurons), primarily because of its manner of derivation: it aims only to maximise the model’s confidence in the correct class, and is not concerned with the distribution of probabilities among the other classes (while the squared error loss would dedicate equal attention to getting all of the other class probabilities as close to zero as possible). This is because the incorrect classes, i.e. classes $i'$ with $\hat{y}_{i'} = 0$, eliminate the respective neurons’ outputs from the loss function.
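The difference between the two losses is easy to see numerically. In this sketch (the probability vectors are made-up examples), two predictions assign the same probability, 0.6, to the correct class but spread the remaining probability differently: the cross-entropy is identical for both, while the squared error is not.

```python
import numpy as np

def cross_entropy(y_pred, y_true):
    """-sum_i y_true_i * ln(y_pred_i), with y_true one-hot and y_pred a probability vector."""
    return -np.sum(y_true * np.log(y_pred))

def squared_error(y_pred, y_true):
    return np.sum((y_true - y_pred) ** 2)

y_true = np.array([0.0, 1.0, 0.0])  # the correct class is class 1
a = np.array([0.2, 0.6, 0.2])
b = np.array([0.35, 0.6, 0.05])

print(cross_entropy(a, y_true), cross_entropy(b, y_true))  # both equal -ln(0.6)
print(squared_error(a, y_true), squared_error(b, y_true))  # about 0.24 vs about 0.285
```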

The optimisation algorithm used will typically be some form of gradient descent; the key differences between such algorithms lie in the manner in which the previously mentioned learning rate, $\eta$, is chosen or adapted during training. An excellent overview of such approaches is given by this blog post; here we will use the Adam optimiser, which typically performs well.

As our classes are (roughly) balanced, with a similar number of handwritten digits in each of the ten classes, an appropriate metric to report is the accuracy: the proportion of the inputs classified correctly.

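```python
model.compile(loss='categorical_crossentropy',  # using the cross-entropy loss function
              optimizer='adam',  # using the Adam optimiser
              metrics=['accuracy'])  # reporting the accuracy
```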

Finally, we call the training algorithm with the determined batch size and epoch count. It is good practice to set aside a fraction of the training data to be used just for verification that our algorithm is (still) properly generalising (this is commonly referred to as the validation set); here we will hold out 10% of the data for this purpose.
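It is worth knowing which 10% is held out: the Keras documentation for `fit` states that the validation data is taken from the last samples of the arrays provided, before any shuffling, so it is not a random subset. A small NumPy sketch of that behaviour follows (the `split_off_validation` helper is hypothetical, not a Keras function). With MNIST’s 60000 training images the same rule leaves 54000 for training and 6000 for validation, matching the training log in this post.

```python
import numpy as np

def split_off_validation(X, Y, validation_split):
    """Hypothetical helper: hold out the last `validation_split` fraction of (X, Y) for validation."""
    num_val = int(len(X) * validation_split)
    split_at = len(X) - num_val
    return (X[:split_at], Y[:split_at]), (X[split_at:], Y[split_at:])

X = np.arange(100).reshape(100, 1)  # stand-in for 100 training inputs
Y = np.arange(100)                  # stand-in for their labels
(X_tr, Y_tr), (X_val, Y_val) = split_off_validation(X, Y, 0.1)
print(len(X_tr), len(X_val))        # 90 10
print(Y_val)                        # the last ten samples: 90 ... 99
```

If the data happen to be ordered (for example, sorted by class), shuffling them before calling `fit` avoids a skewed validation set.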

An excellent out-of-the-box feature of Keras is verbosity; it’s able to provide detailed real-time pretty-printing of the training algorithm’s progress.

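```python
model.fit(X_train, Y_train,  # train the model using the training set...
          batch_size=batch_size, epochs=num_epochs,
          verbose=1, validation_split=0.1)  # ...holding out 10% of the data for validation
model.evaluate(X_test, Y_test, verbose=1)  # evaluate the trained model on the test set
```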

```
Train on 54000 samples, validate on 6000 samples
Epoch 1/20
54000/54000 [==============================] - 9s - loss: 0.2295 - acc: 0.9325 - val_loss: 0.1093 - val_acc: 0.9680
Epoch 2/20
54000/54000 [==============================] - 9s - loss: 0.0819 - acc: 0.9746 - val_loss: 0.0922 - val_acc: 0.9708
Epoch 3/20
54000/54000 [==============================] - 11s - loss: 0.0523 - acc: 0.9835 - val_loss: 0.0788 - val_acc: 0.9772
Epoch 4/20
54000/54000 [==============================] - 12s - loss: 0.0371 - acc: 0.9885 - val_loss: 0.0680 - val_acc: 0.9808
Epoch 5/20
54000/54000 [==============================] - 12s - loss: 0.0274 - acc: 0.9909 - val_loss: 0.0772 - val_acc: 0.9787
Epoch 6/20
54000/54000 [==============================] - 12s - loss: 0.0218 - acc: 0.9931 - val_loss: 0.0718 - val_acc: 0.9808
Epoch 7/20
54000/54000 [==============================] - 12s - loss: 0.0204 - acc: 0.9933 - val_loss: 0.0891 - val_acc: 0.9778
Epoch 8/20
54000/54000 [==============================] - 13s - loss: 0.0189 - acc: 0.9936 - val_loss: 0.0829 - val_acc: 0.9795
Epoch 9/20
54000/54000 [==============================] - 14s - loss: 0.0137 - acc: 0.9950 - val_loss: 0.0835 - val_acc: 0.9797
Epoch 10/20
54000/54000 [==============================] - 13s - loss: 0.0108 - acc: 0.9969 - val_loss: 0.0836 - val_acc: 0.9820
Epoch 11/20
54000/54000 [==============================] - 13s - loss: 0.0123 - acc: 0.9960 - val_loss: 0.0866 - val_acc: 0.9798
Epoch 12/20
54000/54000 [==============================] - 13s - loss: 0.0162 - acc: 0.9951 - val_loss: 0.0780 - val_acc: 0.9838
Epoch 13/20
54000/54000 [==============================] - 12s - loss: 0.0093 - acc: 0.9968 - val_loss: 0.1019 - val_acc: 0.9813
Epoch 14/20
54000/54000 [==============================] - 12s - loss: 0.0075 - acc: 0.9976 - val_loss: 0.0923 - val_acc: 0.9818
Epoch 15/20
54000/54000 [==============================] - 12s - loss: 0.0118 - acc: 0.9965 - val_loss: 0.1176 - val_acc: 0.9772
Epoch 16/20
54000/54000 [==============================] - 12s - loss: 0.0119 - acc: 0.9961 - val_loss: 0.0838 - val_acc: 0.9803
Epoch 17/20
54000/54000 [==============================] - 12s - loss: 0.0073 - acc: 0.9976 - val_loss: 0.0808 - val_acc: 0.9837
Epoch 18/20
54000/54000 [==============================] - 13s - loss: 0.0082 - acc: 0.9974 - val_loss: 0.0926 - val_acc: 0.9822
Epoch 19/20
54000/54000 [==============================] - 12s - loss: 0.0070 - acc: 0.9979 - val_loss: 0.0808 - val_acc: 0.9835
Epoch 20/20
54000/54000 [==============================] - 11s - loss: 0.0039 - acc: 0.9987 - val_loss: 0.1010 - val_acc: 0.9822
10000/10000 [==============================] - 1s
[0.099321320021623111, 0.9819]
```

As can be seen, our model achieves an accuracy of ~98.2% on the test set; this is quite respectable for such a simple model, despite being outclassed by the state-of-the-art approaches enumerated here.

I encourage you to play around with this model: attempt different hyperparameter values/optimisation algorithms/activation functions, add more hidden layers, etc. Eventually, you should be able to achieve accuracies above 99%.

Throughout this post we have covered the essentials of deep learning, and successfully implemented a simple two-layer deep MLP in Keras, applying it to MNIST, all in under 30 lines of code.

Next time around, we will explore convolutional neural networks (CNNs), resolving some of the issues posed by applying MLPs to larger image tasks (such as CIFAR-10).

ABOUT THE AUTHOR

![]()

Petar Veličković

Petar is currently a Research Assistant in Computational Biology within the Artificial Intelligence Group of the Cambridge University Computer Laboratory, where he is working on developing machine learning algorithms on complex networks, and their applications to bioinformatics. He is also a PhD student within the group, supervised by Dr Pietro Liò and affiliated with Trinity College. He holds a BA degree in Computer Science from the University of Cambridge, having completed the Computer Science Tripos in 2015.

Just show me the code!

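```python
from keras.datasets import mnist  # subroutines for fetching the MNIST dataset
from keras.models import Model  # basic class for specifying and training a neural network
from keras.layers import Input, Dense  # the two types of neural network layer we will be using
from keras.utils import to_categorical  # utility for one-hot encoding of ground truth values

batch_size = 128  # in each iteration, we consider 128 training examples at once
num_epochs = 20  # we iterate twenty times over the entire training set
hidden_size = 512  # there will be 512 neurons in both hidden layers

num_train = 60000  # there are 60000 training examples in MNIST
num_test = 10000  # there are 10000 test examples in MNIST

height, width, depth = 28, 28, 1  # MNIST images are 28x28 and greyscale
num_classes = 10  # there are 10 classes (1 per digit)

(X_train, y_train), (X_test, y_test) = mnist.load_data()  # fetch MNIST data

X_train = X_train.reshape(num_train, height * width)  # flatten data to 1D
X_test = X_test.reshape(num_test, height * width)  # flatten data to 1D
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
X_train /= 255  # normalise data to [0, 1] range
X_test /= 255  # normalise data to [0, 1] range

Y_train = to_categorical(y_train, num_classes)  # one-hot encode the labels
Y_test = to_categorical(y_test, num_classes)  # one-hot encode the labels

inp = Input(shape=(height * width,))  # our input is a 1D vector of size 784
hidden_1 = Dense(hidden_size, activation='relu')(inp)  # first hidden ReLU layer
hidden_2 = Dense(hidden_size, activation='relu')(hidden_1)  # second hidden ReLU layer
out = Dense(num_classes, activation='softmax')(hidden_2)  # output softmax layer

model = Model(inputs=inp, outputs=out)  # to define a model, just specify its input and output layers

model.compile(loss='categorical_crossentropy',  # using the cross-entropy loss function
              optimizer='adam',  # using the Adam optimiser
              metrics=['accuracy'])  # reporting the accuracy

model.fit(X_train, Y_train,  # train the model using the training set...
          batch_size=batch_size, epochs=num_epochs,
          verbose=1, validation_split=0.1)  # ...holding out 10% of the data for validation
model.evaluate(X_test, Y_test, verbose=1)  # evaluate the trained model on the test set
```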

Deep learning for complete beginners: Using convolutional nets to recognise images

by Cambridge Coding Academy

Welcome to the second in a series of blog posts that is designed to get you quickly up to speed with deep learning, from first principles all the way to discussions of some of the intricate details, with the purpose of achieving respectable performance on two established machine learning benchmarks: MNIST (classification of handwritten digits) and CIFAR-10 (classification of small images across 10 distinct classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship & truck).

| MNIST | CIFAR-10 |
|---|---|
| ![]() | ![]() |

Last time around, I introduced the fundamental concepts of deep learning, and illustrated how models can be rapidly developed and prototyped by leveraging the Keras deep learning framework. Ultimately, a two-layer multilayer perceptron (MLP) was applied to MNIST, achieving an accuracy level of 98.2%, which can be quite easily improved upon. However, fully connected MLPs will usually not be the model of choice for image-related tasks; it is far more typical to take advantage of a convolutional neural network (CNN) in this case. By the end of this part of the tutorial, you should be able to understand and produce a simple CNN in Keras that achieves a respectable level of accuracy on CIFAR-10.

This tutorial will, for the most part, assume familiarity with the previous one in the series.

The previously mentioned multilayer perceptrons represent the most general and powerful feedforward neural network model possible; they are organised in layers, such that every neuron within a layer receives its own copy of all the outputs of the previous layer as its input. This kind of model is perfect for the right kind of problem: learning from a fixed number of (more or less) unstructured inputs.

However, consider what happens to the number of parameters (weights) of such a model when it is fed raw image data. CIFAR-10, for example, contains $32 \times 32 \times 3$ coloured images: if we are to treat each channel of each pixel as an independent input to an MLP, each neuron of the first hidden layer adds ~3000 new parameters to the model! The situation quickly becomes unmanageable as image sizes grow larger, well before reaching the kind of images people usually want to work with in real applications.
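The arithmetic behind that figure, as a short sketch: each first-layer neuron needs one weight per input value plus a bias. The hidden layer size of 512 below is an assumption borrowed from the MNIST model in the previous tutorial.

```python
height, width, channels = 32, 32, 3    # one CIFAR-10 image
num_inputs = height * width * channels  # every channel of every pixel is an input
params_per_neuron = num_inputs + 1     # one weight per input, plus one bias
hidden_size = 512                      # assumed width of the first hidden layer
print(num_inputs, params_per_neuron, params_per_neuron * hidden_size)  # 3072 3073 1573376
```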

A common solution is to downsample the images to a size where MLPs can safely be applied. However, if we directly downsample the image, we potentially lose a wealth of information; it would be great if we could still perform some useful processing of the image (without causing an explosion in parameter count) prior to downsampling it.

It turns out that there is a very efficient way of pulling this off, and it takes advantage of the structure of the information encoded within an image: it is assumed that pixels that are spatially closer together will “cooperate” on forming a particular feature of interest much more than ones on opposite corners of the image. Also, if a particular (smaller) feature is found to be of great importance when defining an image’s label, it will be equally important if this feature is found anywhere within the image, regardless of location.

Enter the convolution operator. Given a two-dimensional image, $I$, and a small matrix, $K$, of size $h \times w$ (known as a convolution kernel), which we assume encodes a way of extracting an interesting image feature, we compute the convolved image, $I * K$, by overlaying the kernel on top of the image in all possible ways, and recording the sum of elementwise products between the image and the kernel:

$$(I * K)_{xy} = \sum_{i=1}^{h} \sum_{j=1}^{w} K_{ij} \cdot I_{x+i-1,\, y+j-1}$$

(in fact, the exact definition would require us to flip the kernel matrix first, but for the purposes of machine learning it is irrelevant whether this is done: the kernel is learned from data, so the network can just as easily learn its flipped version)
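The formula translates almost symbol for symbol into code. The sketch below (NumPy, with our own function name `conv2d_valid`) computes it without flipping the kernel and only at positions where the kernel fits entirely inside the image; indices are 0-based, so $I_{x+i-1,\,y+j-1}$ becomes `I[x + i, y + j]`. The tiny image and the two-tap kernel are made-up examples of a vertical-edge detector.

```python
import numpy as np

def conv2d_valid(I, K):
    """(I * K)[x, y] = sum_{i, j} K[i, j] * I[x + i, y + j] at every position where K fits inside I."""
    h, w = K.shape
    out_h, out_w = I.shape[0] - h + 1, I.shape[1] - w + 1
    out = np.zeros((out_h, out_w))
    for x in range(out_h):
        for y in range(out_w):
            # overlay the kernel at (x, y) and sum the elementwise products
            out[x, y] = np.sum(K * I[x:x + h, y:y + w])
    return out

# A tiny image with a vertical edge: dark left half, bright right half
I = np.zeros((5, 6))
I[:, 3:] = 1.0
# Responds to left-to-right increases in intensity, i.e. vertical edges
K = np.array([[-1.0, 1.0]])
print(conv2d_valid(I, K))  # every row is [0. 0. 1. 0. 0.]: the edge is found in column 2
```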

The images below show a diagrammatic overview of the above formula and the result of applying convolution (with two separate kernels) over an image, to act as an edge detector:

![]()

![]()

The convolution operator forms the fundamental basis of the convolutional layer of a CNN. The layer is completely specified by a certain number of kernels, $\vec{K}$ (along with additive biases, $\vec{b}$, one per kernel), and it operates by computing the convolution of the output images of a previous layer with each of those kernels, afterwards adding the biases (one per output image). Finally, an activation function, $\sigma$, may be applied to all of the pixels of the output images. Typically, the input to a convolutional layer will have $d$ channels (e.g. red/green/blue in the input layer), in which case the kernels are extended to have this number of channels as well, making the final formula for a single output image channel of a convolutional layer (for a kernel $K$ and bias $b$) as follows:

$$\mathrm{conv}(I, K)_{xy} = \sigma\left(b + \sum_{i=1}^{h} \sum_{j=1}^{w} \sum_{k=1}^{d} K_{ijk} \cdot I_{x+i-1,\, y+j-1,\, k}\right)$$
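Extending the single-channel computation to several input channels and several kernels gives a naive convolutional layer. The sketch below assumes a channels-last layout, stride 1, “valid” padding and a ReLU activation; these are illustrative choices, and real frameworks use far faster implementations than these explicit loops. Each kernel contributes one output channel.

```python
import numpy as np

def conv_layer(I, kernels, biases):
    """Naive convolutional layer: stride 1, no padding, ReLU activation.
    I: (H, W, d) input; kernels: (n, h, w, d); biases: (n,). Returns (H-h+1, W-w+1, n)."""
    H, W, d = I.shape
    n, h, w, d_k = kernels.shape
    if d != d_k:
        raise ValueError("each kernel needs as many channels as the input")
    out = np.zeros((H - h + 1, W - w + 1, n))
    for c in range(n):  # one output channel per kernel
        for x in range(H - h + 1):
            for y in range(W - w + 1):
                # b + sum over i, j, k of K[i, j, k] * I[x + i, y + j, k] (0-based indices)
                out[x, y, c] = biases[c] + np.sum(kernels[c] * I[x:x + h, y:y + w, :])
    return np.maximum(0.0, out)  # ReLU as the activation sigma

rng = np.random.default_rng(0)
I = rng.normal(size=(8, 8, 3))           # a small 3-channel (e.g. RGB) input
kernels = rng.normal(size=(4, 3, 3, 3))  # 4 kernels of size 3x3, each with 3 channels
biases = np.zeros(4)
out = conv_layer(I, kernels, biases)
print(out.shape)  # (6, 6, 4)
```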

Note that, since all we’re doing here is addition and scaling of the input pixels, the kernels may be learned from a given training dataset via gradient descent, exactly as the weights of an MLP. In fact, an MLP is perfectly capable of replicating a convolutional layer, but it would require a lot more training time (and data) to learn to approximate that mode of operation.

Finally, let’s just note that a convolutional operator is in no way restricted to two-dimensionally structured data: in fact, most machine learning frameworks (Keras included) will provide you with out-of-the-box layers for 1D and 3D convolutions as well!

It is important to note that, while a convolutional layer significantly decreases the number of parameters compared to a fully connected (FC) layer, it introduces more hyperparameters: parameters whose values need to be chosen before training starts.

Namely, the hyperparameters to choose within a single convolutional layer are:

– depth: how many different kernels (and biases) will be convolved with the output of the previous layer;

– height and width of each kernel;

– stride: by how much we shift the kernel in each step to compute the next pixel in the result. This specifies the overlap between individual output pixels, and typically it is set to 1, corresponding to the formula given before. Note that larger strides result in smaller output sizes.

– padding: note that convolution by any kernel larger than $1 \times 1$ will decrease the output image size; it is often desirable to keep sizes the same, in which case the image is sufficiently padded with zeroes at the edges. This is often called “same” padding, as opposed to “valid” (no) padding. It is possible to add arbitrary levels of padding, but typically the padding of choice will be either same or valid.
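These hyperparameters determine the output size through a standard relation: along a spatial dimension of size $n$, a kernel of size $k$ with stride $s$ and $p$ zeros of padding per side gives $\lfloor (n + 2p - k)/s \rfloor + 1$ outputs. The sketch below (helper names are our own) evaluates it for a 32-pixel-wide CIFAR-10 image and a $3 \times 3$ kernel, and also counts the parameters of a convolutional layer with, as an example, 32 kernels; unlike those of a fully connected layer, they do not depend on the image size at all.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Output size along one dimension: floor((n + 2 * padding - kernel) / stride) + 1."""
    return (n + 2 * padding - kernel) // stride + 1

def same_padding(kernel):
    """Zeros per side that keep the size unchanged at stride 1 (for odd kernel sizes)."""
    return (kernel - 1) // 2

def conv_params(kernel_h, kernel_w, in_channels, num_kernels):
    """One kernel_h x kernel_w x in_channels kernel plus one bias per output channel."""
    return (kernel_h * kernel_w * in_channels + 1) * num_kernels

print(conv_output_size(32, 3))                           # "valid": 30
print(conv_output_size(32, 3, padding=same_padding(3)))  # "same": 32
print(conv_output_size(32, 3, stride=2, padding=1))      # larger stride: 16
print(conv_params(3, 3, 3, 32))                          # 896, for any image size
```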

As already hinted, convolutions are not typically meant to be the sole operation in a CNN (although there have been promising recent developments on all-convolutional networks); rather, they extract useful features of an image prior to downsampling it sufficiently to be manageable by an MLP.

A very popular approach to downsampling is a pooling layer, which consumes small and (usually) disjoint chunks of the image (typically $2 \times 2$) and aggregates them into a single value. There are several possible schemes for the aggregation, the most popular being max-pooling, where the maximum pixel value within each chunk is taken. A diagrammatic illustration of $2 \times 2$ max-pooling is given below.

![]()
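For disjoint chunks, max-pooling can be written in NumPy with a reshape that gives each chunk its own pair of axes. This is a sketch for a single-channel image whose height and width are divisible by the pool size (an assumption the helper does not check); the example values are made up.

```python
import numpy as np

def max_pool(I, pool=2):
    """Non-overlapping pool x pool max-pooling of a 2D array."""
    H, W = I.shape
    # Axis 1 indexes rows within a chunk, axis 3 columns within a chunk
    return I.reshape(H // pool, pool, W // pool, pool).max(axis=(1, 3))

I = np.array([[1, 3, 2, 1],
              [4, 2, 0, 1],
              [5, 1, 7, 8],
              [0, 6, 3, 2]])
print(max_pool(I))
# [[4 2]
#  [6 8]]
```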

Putting it all together: a common CNN

Now that we have all the building blocks, let’s see what a typical convolutional neural network might look like!

![]()

A typical CNN architecture for $k$-class image classification can be split into two distinct parts: a chain of repeating Conv → Pool layers (sometimes with more than one convolutional layer at once), followed by a few fully connected layers (taking each pixel of the computed images as an independent input), culminating in a $k$-way softmax layer, on which a cross-entropy loss is optimised. I did not draw the activation functions here to keep the sketch clear, but do keep in mind that typically after every convolutional or fully connected layer, an activation (e.g. ReLU) will be applied to all of the outputs.

Note the effect of a single Conv → Pool pass through the image: it reduces the height and width of the individual channels in favour of their number, i.e. depth.
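The trade-off between spatial size and depth can be traced with simple shape bookkeeping. Assuming “same” padding in the convolutions and $2 \times 2$ pooling, and using 32 and then 64 kernels purely as an example, a $32 \times 32 \times 3$ CIFAR-10 image evolves as follows:

```python
def conv_pool_shape(shape, num_kernels, pool=2):
    """(height, width, depth) after a 'same'-padded convolution with num_kernels kernels
    followed by non-overlapping pool x pool pooling."""
    height, width, _ = shape
    return (height // pool, width // pool, num_kernels)

shape = (32, 32, 3)           # a CIFAR-10 image
for num_kernels in (32, 64):  # example kernel counts for two Conv -> Pool passes
    shape = conv_pool_shape(shape, num_kernels)
    print(shape)
# (16, 16, 32)
# (8, 8, 64)
```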

The softmax layer and cross-entropy loss are both introduced in more detail in the previous tutorial. To summarise, a softmax layer’s purpose is to convert any vector of real numbers into a vector of probabilities (nonnegative real values that add up to 1). Within this context, the probabilities correspond to the likelihoods that an input image is a member of a particular class. Minimising the cross-entropy loss has the effect of maximising the model’s confidence in the correct class, without being concerned with the probabilities for other classes; this makes it a more suitable choice for probabilistic tasks compared to, for example, the squared error loss.

Detour: Overfitting, regularisation and dropout

This will be the first (and hopefully the only) time I divert your attention to a seemingly unrelated topic. It concerns a very important pitfall of machine learning: overfitting a model to the training data. While this is going to be a major topic of the next tutorial in the series, the negative effects of overfitting tend to become quite noticeable on networks like the one we are about to build, and we need to introduce a way to properly protect ourselves against it before going any further. Luckily, there is a very simple technique we can use.

Overfitting corresponds to adapting our model to the training set to such an extreme that its generalisation potential (performance on samples outside of the training set) is severely limited. In other words, our model might have learned the training set (along with any noise present within it) perfectly, but it has failed to capture the underlying process that generated it. To illustrate, consider the problem of fitting a sine curve, with additive white noise applied to the data points:

![]()

Here we have a training set (denoted by blue circles) derived from the original sine wave, along with some noise. If we fit a degree-3 polynomial to this data, we get a fairly good approximation to the original curve. Someone might argue that a degree-14 polynomial would do better; indeed, given we have 15 points, such a fit would perfectly describe the training data. However, in this case, the additional parameters of the model cause catastrophic results: in trying to fit the inherent noise of the data, the curve is completely off everywhere except in the close vicinity of the training points.
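The experiment can be reproduced approximately in NumPy. The sketch below makes its own assumptions (the noise level, the random seed and the interval $[0, 2\pi]$ are not taken from the figure) and uses `numpy.polynomial.Polynomial.fit`, which rescales the inputs internally and so copes better with a degree-14 fit than raw powers of $x$ would. It compares the mean squared error on the 15 training points with the error against the true sine on a dense grid.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)
x_train = np.linspace(0.0, 2.0 * np.pi, 15)                            # 15 training points
y_train = np.sin(x_train) + rng.normal(scale=0.2, size=x_train.shape)  # assumed noise level
x_grid = np.linspace(0.0, 2.0 * np.pi, 500)                            # dense grid for evaluation

def fit_errors(degree):
    """Fit a polynomial of the given degree; return (training MSE, MSE against the true sine)."""
    p = Polynomial.fit(x_train, y_train, degree)
    train_mse = np.mean((p(x_train) - y_train) ** 2)
    true_mse = np.mean((p(x_grid) - np.sin(x_grid)) ** 2)
    return train_mse, true_mse

errors = {degree: fit_errors(degree) for degree in (3, 14)}
for degree, (train_mse, true_mse) in errors.items():
    print(f"degree {degree:2d}: training MSE {train_mse:.2e}, error vs. true curve {true_mse:.2e}")
```

The degree-14 fit passes (numerically) through every training point, so its training error is essentially zero, yet its error against the underlying curve is far larger than that of the cubic.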

Deep convolutional neural networks have a large number of parameters, especially in the fully connected layers. Overfitting might often manifest in the following form: if we don’t have sufficiently many training examples, a small group of neurons might become responsible for doing most of the processing, with other neurons becoming redundant; or, at the other extreme, some neurons might actually become detrimental to performance, with several other neurons of their layer ending up doing nothing but correcting for their errors.

To help our models generalise better in these circumstances, we introduce techniques of regularisation: rather than reducing the number of parameters, we impose constraints on the model parameters during training to keep them from learning the noise in the training data. The particular method I will introduce here is dropout, a technique that initially might seem like “dark magic”, but actually helps to eliminate exactly the failure modes described above. Namely, dropout with parameter $p$ will, within a single training iteration, go through all neurons in a particular layer and, with probability $p$, completely eliminate them from the network for the duration of that iteration. This forces the neural network to cope with failures and not to rely on the existence of a particular neuron (or set of neurons), relying instead on a consensus of several neurons within a layer. This very simple technique already works quite well for combatting overfitting on its own, without introducing further regularisers. An illustration is given below.

![]()
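Dropout itself takes only a few lines. The sketch below uses the common “inverted dropout” formulation, which Keras’s `Dropout` layer also documents: during training each unit is zeroed with probability $p$ and the survivors are scaled by $1/(1-p)$, so the expected activation is unchanged and the layer can simply be switched off at test time. The function name and test values are illustrative.

```python
import numpy as np

def dropout(h, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p and scale survivors by 1 / (1 - p).
    At test time (training=False) the input is returned unchanged."""
    if not training or p == 0.0:
        return h
    keep_mask = rng.random(h.shape) >= p  # True with probability 1 - p
    return h * keep_mask / (1.0 - p)

rng = np.random.default_rng(0)
h = np.ones(100000)  # a (very wide) layer of activations, all equal to 1
out = dropout(h, p=0.5, rng=rng)
print(np.mean(out == 0.0), out.mean())  # roughly 0.5 of units dropped, mean still roughly 1.0
```

Because a fresh mask is drawn on every call, each training iteration effectively trains a different “thinned” network, which is what discourages neurons from relying on specific partners.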

Applying a deep CNN to CIFAR-10

As this post’s objective, we will implement a deep convolutional neural network and apply it to the CIFAR-10 image classification task.

Imports are largely similar to last time, apart from the fact that we will be using a wider variety of layers:

```python
from keras.datasets import cifar10
from keras.models import Model
from keras.layers import Input, Convolution2D, MaxPooling2D, Dense, Dropout, Flatten
from keras.utils import np_utils
import numpy as np
```

Using Theano backend.

As already mentioned, a CNN will typically have more hyperparameters than an MLP. For the purposes of this tutorial, we will again stick to “sensible” hand-picked values for them, but keep in mind that later on I will introduce a more principled method for choosing them.

The hyperparameters are:

– The batch size, representing the number of training examples being used simultaneously during a single iteration of the gradient descent algorithm;

– The number of epochs, representing the number of times the training algorithm will iterate over the entire training set before terminating*;

– The kernel sizes in the convolutional layers;

– The pooling size in the pooling layers;

– The number of kernels in the convolutional layers;

– The dropout probability (we will apply dropout after each pooling, and after the fully connected layer);

– The number of neurons in the fully connected layer of the MLP.

* N.B. Here I have set the number of epochs to 200, which might be undesirably slow if you do not have a GPU at your disposal (the convolutional layers will be a significant performance bottleneck in that case). You might wish to decrease the epoch count and/or the number of kernels if you are going to train the network on a CPU.

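```python
batch_size = 32     # in each iteration, we consider 32 training examples at once
num_epochs = 200    # we iterate 200 times over the entire training set
kernel_size = 3     # we will use 3x3 kernels throughout
pool_size = 2       # we will use 2x2 pooling throughout
conv_depth_1 = 32   # we will initially have 32 kernels per conv. layer...
conv_depth_2 = 64   # ...switching to 64 after the first pooling layer
drop_prob_1 = 0.25  # dropout after pooling with probability 0.25
drop_prob_2 = 0.5   # dropout in the fully connected layer with probability 0.5
hidden_size = 512   # the fully connected layer will have 512 neurons
```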
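As a quick sanity check on what these settings cost, the snippet below counts the gradient-descent updates they imply. It assumes 45,000 examples are used for training in each epoch (CIFAR-10's 50,000 training images minus the 10% held out for validation, matching the "Train on 45000 samples" line of the training log), and that a final, smaller batch is still used when that number is not a multiple of the batch size.

```python
import math

num_images = 50000                   # CIFAR-10 training images
num_held_out = num_images // 10      # 10% held out for validation
num_fit = num_images - num_held_out  # examples actually used for the updates

batch_size = 32
num_epochs = 200

updates_per_epoch = math.ceil(num_fit / batch_size)  # the last batch is partial
total_updates = updates_per_epoch * num_epochs
print(num_fit, updates_per_epoch, total_updates)  # 45000 1407 281400
```

Roughly 281,000 updates, each involving four convolutional layers, is why a GPU makes such a difference here.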

Loading and preprocessing the CIFAR-10 dataset is done in exactly the same way as for MNIST, with Keras routines doing most of the work. The sole difference is that now we do not initially consider each pixel an independent input feature, and therefore we do not reshape the input to 1D. We will once again scale the pixel intensity values into the range $[0, 1]$ and use a one-hot encoding for the output labels.

However, this time around, this stage will be done in a more general way, to allow you to adapt it more easily to new datasets: the sizes will be extracted from the dataset rather than hardcoded, the number of classes is inferred from the number of unique labels in the training set, and the normalisation is performed via division by the maximum value in the training set.

N.B. We divide the testing set by the maximum of the training set because our algorithms are not allowed to see the testing data before the learning process is complete; we are therefore not allowed to compute any statistics on it, and may only apply transformations derived entirely from the training set.

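```python
(X_train, y_train), (X_test, y_test) = cifar10.load_data()

# With the channels-first ordering used by the Theano backend here,
# X_train has shape (num_images, channels, height, width)
num_train, depth, height, width = X_train.shape
num_test = X_test.shape[0]
num_classes = np.unique(y_train).shape[0]  # infer the number of classes from the training labels

X_train = X_train.astype('float32')
X_test = X_test.astype('float32')

# Compute the scaling factor from the training set *before* modifying X_train;
# re-reading np.max(X_train) after normalising it would give 1 and leave X_test unscaled
max_train = np.max(X_train)
X_train /= max_train  # normalise training data to the [0, 1] range
X_test /= max_train   # apply the same training-set statistic to the test data

Y_train = np_utils.to_categorical(y_train, num_classes)  # one-hot encode the labels
Y_test = np_utils.to_categorical(y_test, num_classes)
```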
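The same preprocessing logic can be illustrated in plain Python on toy data. In this sketch the function names and pixel values are invented for illustration, and `one_hot` only mimics the idea of `np_utils.to_categorical` for integer labels. It also shows why the order of operations matters: once the training data has been divided by its own maximum, that maximum becomes 1, so re-reading it to scale the test data leaves the test data unscaled.

```python
def fit_scale(train_pixels):
    """The only statistic we are allowed to compute: the training-set maximum."""
    return max(train_pixels)


def scale(pixels, factor):
    return [p / factor for p in pixels]


def one_hot(labels, num_classes):
    """Integer labels 0..num_classes-1 to one-hot rows (the idea behind to_categorical)."""
    return [[1.0 if k == label else 0.0 for k in range(num_classes)] for label in labels]


train_pixels = [0.0, 51.0, 255.0]
test_pixels = [102.0, 255.0]
train_labels = [2, 0, 1]

factor = fit_scale(train_pixels)       # computed once, from the training set only
train_scaled = scale(train_pixels, factor)
test_scaled = scale(test_pixels, factor)
num_classes = len(set(train_labels))   # inferred from the unique training labels
print(test_scaled)                     # [0.4, 1.0]
print(one_hot(train_labels, num_classes))
# [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

# Pitfall: rescale the training set first, then re-read its maximum
buggy_factor = max(train_scaled)        # now 1.0, not 255.0
print(scale(test_pixels, buggy_factor))  # [102.0, 255.0]: the test set is left unscaled
```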

Modelling time! Our network will consist of four Convolution2D layers, with a MaxPooling2D layer following the second and the fourth convolution. After the first pooling layer, we double the number of kernels (in line with the previously mentioned principle of sacrificing height and width for more depth). Afterwards, the output of the second pooling layer is flattened to 1D (via the Flatten layer) and passed through two fully connected (Dense) layers. ReLU activations will once again be used for all layers except the output dense layer, which will use a softmax activation (for the purposes of probabilistic classification).
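For readers who want to see what the softmax output and the cross-entropy objective compute, here is a small dependency-free sketch for a single example. The function names are my own and the scores are made-up numbers; Keras's `categorical_crossentropy` computes essentially the same quantity, averaged over the examples in a batch.

```python
import math


def softmax(logits):
    """Turn arbitrary real scores into a probability distribution over classes."""
    shift = max(logits)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot_target, probs):
    """-sum_k t_k * log(p_k); for a one-hot target, -log(probability of the true class)."""
    return -sum(t * math.log(p) for t, p in zip(one_hot_target, probs) if t > 0)


probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])                         # [0.659, 0.242, 0.099]
print(categorical_cross_entropy([1.0, 0.0, 0.0], probs))  # -log(0.659...), about 0.417
```

The loss is small when the network assigns high probability to the correct class and grows without bound as that probability approaches zero.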

To regularise our model, a Dropout layer is applied after each pooling layer, and after the first Dense layer. This is another area where Keras shines compared to other frameworks: it has an internal flag that automatically enables or disables dropout, depending on whether the model is currently used for training or testing.
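To see how the spatial size shrinks while the depth grows, and where the model's parameters live, we can trace the architecture by hand. The sketch below does this arithmetic in plain Python for the hyperparameters chosen above. It assumes 32×32 RGB inputs, that `'same'` border mode preserves height and width, and that each 2×2 pooling halves them; counts include one bias per kernel or neuron, and shapes are written as (height, width, depth). The helper names are my own; Keras's `model.summary()` gives a similar per-layer breakdown for the real model.

```python
def conv_params(in_channels, num_kernels, kernel_size):
    # each kernel spans all input channels, plus one bias per kernel
    return kernel_size * kernel_size * in_channels * num_kernels + num_kernels


def dense_params(n_in, n_out):
    # full weight matrix plus one bias per output neuron
    return n_in * n_out + n_out


def trace_model(height=32, width=32, channels=3, kernel_size=3, pool_size=2,
                depths=(32, 64), hidden_size=512, num_classes=10):
    """Return (layer, output shape, parameter count) for the conv net described in the text."""
    layers = []
    h, w, c = height, width, channels
    for block, depth in enumerate(depths, start=1):
        for _ in range(2):  # two 'same'-padded convolutions per block
            layers.append((f"conv (block {block})", (h, w, depth),
                           conv_params(c, depth, kernel_size)))
            c = depth
        h, w = h // pool_size, w // pool_size  # max pooling; dropout adds no parameters
        layers.append((f"pool (block {block})", (h, w, c), 0))
    flat = h * w * c
    layers.append(("flatten", (flat,), 0))
    layers.append(("dense + ReLU", (hidden_size,), dense_params(flat, hidden_size)))
    layers.append(("dense + softmax", (num_classes,), dense_params(hidden_size, num_classes)))
    return layers


layers = trace_model()
for name, shape, params in layers:
    print(f"{name:16s} {str(shape):14s} {params:>9,d}")
print("total parameters:", sum(p for _, _, p in layers))  # total parameters: 2168362
```

With these settings the single hidden dense layer holds 2,097,664 of the roughly 2.17 million parameters, in line with the earlier remark that most parameters sit in the fully connected layers.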

The remainder of the model specification exactly matches our previous setup for MNIST:

– We use the cross-entropy loss function as the objective to optimise (it is the natural choice when the model outputs a probability distribution over the classes, as our softmax layer does);

– We use the Adam optimiser for gradient descent;

– We report the accuracy of the model (as the dataset is balanced across the ten classes)*;

– We hold out 10% of the data for validation purposes.

* To get a feeling for why accuracy might be inappropriate for unbalanced datasets, consider an extreme case where 90% of the test data belongs to class $x$ (this could be, for example, the task of diagnosing patients for an extremely rare disease). In this case, a classifier that always outputs $x$ achieves a seemingly impressive accuracy of 90% on the test data, without really doing any learning or generalisation.
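The extreme case from the footnote can be reproduced in a few lines. In this sketch the 100-example test set and the helper names are made up for illustration: a "classifier" that always answers $x$ scores 90% accuracy, yet its recall on the rare class $y$ (the fraction of genuinely positive cases it identifies) is zero.

```python
def accuracy(predictions, labels):
    return sum(p == t for p, t in zip(predictions, labels)) / len(labels)


def recall(predictions, labels, cls):
    """Fraction of the examples of class cls that the classifier labels as cls."""
    relevant = [p for p, t in zip(predictions, labels) if t == cls]
    return sum(p == cls for p in relevant) / len(relevant)


labels = ["x"] * 90 + ["y"] * 10        # 90% of the test data belongs to class x
predictions = ["x"] * len(labels)       # a "classifier" that always outputs x

print(accuracy(predictions, labels))     # 0.9
print(recall(predictions, labels, "y"))  # 0.0
```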

```python
inp = Input(shape=(depth, height, width))  # channels-first input, matching the data layout above

# Conv [32] -> Conv [32] -> Pool (with dropout on the pooling layer)
conv_1 = Convolution2D(conv_depth_1, kernel_size, kernel_size, border_mode='same', activation='relu')(inp)
conv_2 = Convolution2D(conv_depth_1, kernel_size, kernel_size, border_mode='same', activation='relu')(conv_1)
pool_1 = MaxPooling2D(pool_size=(pool_size, pool_size))(conv_2)
drop_1 = Dropout(drop_prob_1)(pool_1)

# Conv [64] -> Conv [64] -> Pool (with dropout on the pooling layer)
conv_3 = Convolution2D(conv_depth_2, kernel_size, kernel_size, border_mode='same', activation='relu')(drop_1)
conv_4 = Convolution2D(conv_depth_2, kernel_size, kernel_size, border_mode='same', activation='relu')(conv_3)
pool_2 = MaxPooling2D(pool_size=(pool_size, pool_size))(conv_4)
drop_2 = Dropout(drop_prob_1)(pool_2)

# Flatten to 1D, apply a fully connected ReLU layer (with dropout), then a softmax output layer
flat = Flatten()(drop_2)
hidden = Dense(hidden_size, activation='relu')(flat)
drop_3 = Dropout(drop_prob_2)(hidden)
out = Dense(num_classes, activation='softmax')(drop_3)

model = Model(input=inp, output=out)  # Keras 1.x keyword names

model.compile(loss='categorical_crossentropy',  # cross-entropy loss function
              optimizer='adam',                 # Adam optimiser
              metrics=['accuracy'])             # report accuracy

model.fit(X_train, Y_train,                     # train the model using the training set...
          batch_size=batch_size, nb_epoch=num_epochs,
          verbose=1, validation_split=0.1)      # ...holding out 10% of the data for validation
model.evaluate(X_test, Y_test, verbose=1)       # evaluate the trained model on the test set
```

Train on 45000 samples, validate on 5000 samples

Epoch 1/200

45000/45000 [==============================] - 9s - loss: 1.5435 - acc: 0.4359 - val_loss: 1.2057 - val_acc: 0.5672

Epoch 2/200

45000/45000 [==============================] - 9s - loss: 1.1544 - acc: 0.5886 - val_loss: 0.9679 - val_acc: 0.6566

Epoch 3/200

45000/45000 [==============================] - 8s - loss: 1.0114 - acc: 0.6418 - val_loss: 0.8807 - val_acc: 0.6870

Epoch 4/200

45000/45000 [==============================] - 8s - loss: 0.9183 - acc: 0.6766 - val_loss: 0.7945 - val_acc: 0.7224

Epoch 5/200

45000/45000 [==============================] - 9s - loss: 0.8507 - acc: 0.6994 - val_loss: 0.7531 - val_acc: 0.7400

Epoch 6/200

45000/45000 [==============================] - 9s - loss: 0.8064 - acc: 0.7161 - val_loss: 0.7174 - val_acc: 0.7496

Epoch 7/200

45000/45000 [==============================] - 9s - loss: 0.7561 - acc: 0.7331 - val_loss: 0.7116 - val_acc: 0.7622

Epoch 8/200

45000/45000 [==============================] - 9s - loss: 0.7156 - acc: 0.7476 - val_loss: 0.6773 - val_acc: 0.7670

Epoch 9/200

45000/45000 [==============================] - 9s - loss: 0.6833 - acc: 0.7594 - val_loss: 0.6855 - val_acc: 0.7644

Epoch 10/200

45000/45000 [==============================] - 9s - loss: 0.6580 - acc: 0.7656 - val_loss: 0.6608 - val_acc: 0.7748

Epoch 11/200

45000/45000 [==============================] - 9s - loss: 0.6308 - acc: 0.7750 - val_loss: 0.6854 - val_acc: 0.7730

Epoch 12/200

45000/45000 [==============================] - 9s - loss: 0.6035 - acc: 0.7832 - val_loss: 0.6853 - val_acc: 0.7744

Epoch 13/200

45000/45000 [==============================] - 9s - loss: 0.5871 - acc: 0.7914 - val_loss: 0.6762 - val_acc: 0.7748

Epoch 14/200

45000/45000 [==============================] - 8s - loss: 0.5693 - acc: 0.8000 - val_loss: 0.6868 - val_acc: 0.7740

Epoch 15/200

45000/45000 [==============================] - 9s - loss: 0.5555 - acc: 0.8036 - val_loss: 0.6835 - val_acc: 0.7792

Epoch 16/200

45000/45000 [==============================] - 9s - loss: 0.5370 - acc: 0.8126 - val_loss: 0.6885 - val_acc: 0.7774

Epoch 17/200

45000/45000 [==============================] - 9s - loss: 0.5270 - acc: 0.8134 - val_loss: 0.6604 - val_acc: 0.7866

Epoch 18/200

45000/45000 [==============================] - 9s - loss: 0.5090 - acc: 0.8194 - val_loss: 0.6652 - val_acc: 0.7860

Epoch 19/200

45000/45000 [==============================] - 9s - loss: 0.5066 - acc: 0.8193 - val_loss: 0.6632 - val_acc: 0.7858

Epoch 20/200

45000/45000 [==============================] - 9s - loss: 0.4938 - acc: 0.8248 - val_loss: 0.6844 - val_acc: 0.7872

Epoch 21/200

45000/45000 [==============================] - 9s - loss: 0.4684 - acc: 0.8361 - val_loss: 0.6861 - val_acc: 0.7904

Epoch 22/200

45000/45000 [==============================] - 9s - loss: 0.4696 - acc: 0.8365 - val_loss: 0.6349 - val_acc: 0.7980

Epoch 23/200

45000/45000 [==============================] - 9s - loss: 0.4584 - acc: 0.8387 - val_loss: 0.6592 - val_acc: 0.7926

Epoch 24/200

45000/45000 [==============================] - 9s - loss: 0.4410 - acc: 0.8443 - val_loss: 0.6822 - val_acc: 0.7876

Epoch 25/200

45000/45000 [==============================] - 8s - loss: 0.4404 - acc: 0.8454 - val_loss: 0.7103 - val_acc: 0.7784

Epoch 26/200

45000/45000 [==============================] - 8s - loss: 0.4276 - acc: 0.8512 - val_loss: 0.6783 - val_acc: 0.7858

Epoch 27/200

45000/45000 [==============================] - 8s - loss: 0.4152 - acc: 0.8542 - val_loss: 0.6657 - val_acc: 0.7944

Epoch 28/200

45000/45000 [==============================] - 9s - loss: 0.4107 - acc: 0.8549 - val_loss: 0.6861 - val_acc: 0.7888

Epoch 29/200

45000/45000 [==============================] - 9s - loss: 0.4115 - acc: 0.8548 - val_loss: 0.6634 - val_acc: 0.7996

Epoch 30/200

45000/45000 [==============================] - 9s - loss: 0.4057 - acc: 0.8586 - val_loss: 0.7166 - val_acc: 0.7896

Epoch 31/200

45000/45000 [==============================] - 9s - loss: 0.3992 - acc: 0.8605 - val_loss: 0.6734 - val_acc: 0.7998

Epoch 32/200

45000/45000 [==============================] - 9s - loss: 0.3863 - acc: 0.8637 - val_loss: 0.7263 - val_acc: 0.7844

Epoch 33/200

45000/45000 [==============================] - 9s - loss: 0.3933 - acc: 0.8644 - val_loss: 0.6953 - val_acc: 0.7860

Epoch 34/200

45000/45000 [==============================] - 9s - loss: 0.3838 - acc: 0.8663 - val_loss: 0.7040 - val_acc: 0.7916

Epoch 35/200

45000/45000 [==============================] - 9s - loss: 0.3800 - acc: 0.8674 - val_loss: 0.7233 - val_acc: 0.7970

Epoch 36/200

45000/45000 [==============================] - 9s - loss: 0.3775 - acc: 0.8697 - val_loss: 0.7234 - val_acc: 0.7922

Epoch 37/200

45000/45000 [==============================] - 9s - loss: 0.3681 - acc: 0.8746 - val_loss: 0.6751 - val_acc: 0.7958

Epoch 38/200

45000/45000 [==============================] - 9s - loss: 0.3679 - acc: 0.8732 - val_loss: 0.7014 - val_acc: 0.7976

Epoch 39/200

45000/45000 [==============================] - 9s - loss: 0.3540 - acc: 0.8769 - val_loss: 0.6768 - val_acc: 0.8022

Epoch 40/200

45000/45000 [==============================] - 9s - loss: 0.3531 - acc: 0.8783 - val_loss: 0.7171 - val_acc: 0.7986

Epoch 41/200

45000/45000 [==============================] - 9s - loss: 0.3545 - acc: 0.8786 - val_loss: 0.7164 - val_acc: 0.7930

Epoch 42/200

45000/45000 [==============================] - 9s - loss: 0.3453 - acc: 0.8799 - val_loss: 0.7078 - val_acc: 0.7994

Epoch 43/200

45000/45000 [==============================] - 8s - loss: 0.3488 - acc: 0.8798 - val_loss: 0.7272 - val_acc: 0.7958

Epoch 44/200

45000/45000 [==============================] - 9s - loss: 0.3471 - acc: 0.8797 - val_loss: 0.7110 - val_acc: 0.7916

Epoch 45/200

45000/45000 [==============================] - 9s - loss: 0.3443 - acc: 0.8810 - val_loss: 0.7391 - val_acc: 0.7952

Epoch 46/200

45000/45000 [==============================] - 9s - loss: 0.3342 - acc: 0.8841 - val_loss: 0.7351 - val_acc: 0.7970

Epoch 47/200

45000/45000 [==============================] - 9s - loss: 0.3311 - acc: 0.8842 - val_loss: 0.7302 - val_acc: 0.8008

Epoch 48/200

45000/45000 [==============================] - 9s - loss: 0.3320 - acc: 0.8868 - val_loss: 0.7145 - val_acc: 0.8002

Epoch 49/200

45000/45000 [==============================] - 9s - loss: 0.3264 - acc: 0.8883 - val_loss: 0.7640 - val_acc: 0.7942

Epoch 50/200

45000/45000 [==============================] - 9s - loss: 0.3247 - acc: 0.8880 - val_loss: 0.7289 - val_acc: 0.7948

Epoch 51/200

45000/45000 [==============================] - 9s - loss: 0.3279 - acc: 0.8886 - val_loss: 0.7340 - val_acc: 0.7910

Epoch 52/200

45000/45000 [==============================] - 9s - loss: 0.3224 - acc: 0.8901 - val_loss: 0.7454 - val_acc: 0.7914

Epoch 53/200

45000/45000 [==============================] - 9s - loss: 0.3219 - acc: 0.8916 - val_loss: 0.7328 - val_acc: 0.8016

Epoch 54/200

45000/45000 [==============================] - 9s - loss: 0.3163 - acc: 0.8919 - val_loss: 0.7442 - val_acc: 0.7996

Epoch 55/200

45000/45000 [==============================] - 9s - loss: 0.3071 - acc: 0.8962 - val_loss: 0.7427 - val_acc: 0.7898

Epoch 56/200

45000/45000 [==============================] - 9s - loss: 0.3158 - acc: 0.8944 - val_loss: 0.7685 - val_acc: 0.7920

Epoch 57/200

45000/45000 [==============================] - 8s - loss: 0.3126 - acc: 0.8942 - val_loss: 0.7717 - val_acc: 0.8062

Epoch 58/200

45000/45000 [==============================] - 9s - loss: 0.3156 - acc: 0.8919 - val_loss: 0.6993 - val_acc: 0.7984

Epoch 59/200

45000/45000 [==============================] - 9s - loss: 0.3030 - acc: 0.8970 - val_loss: 0.7359 - val_acc: 0.8016

Epoch 60/200

45000/45000 [==============================] - 9s - loss: 0.3022 - acc: 0.8969 - val_loss: 0.7427 - val_acc: 0.7954

Epoch 61/200

45000/45000 [==============================] - 9s - loss: 0.3072 - acc: 0.8950 - val_loss: 0.7829 - val_acc: 0.7996

Epoch 62/200

45000/45000 [==============================] - 9s - loss: 0.2977 - acc: 0.8996 - val_loss: 0.8096 - val_acc: 0.7958

Epoch 63/200

45000/45000 [==============================] - 9s - loss: 0.3033 - acc: 0.8983 - val_loss: 0.7424 - val_acc: 0.7972

Epoch 64/200

45000/45000 [==============================] - 9s - loss: 0.2985 - acc: 0.9003 - val_loss: 0.7779 - val_acc: 0.7930

Epoch 65/200

45000/45000 [==============================] - 8s - loss: 0.2931 - acc: 0.9004 - val_loss: 0.7302 - val_acc: 0.8010

Epoch 66/200

45000/45000 [==============================] - 8s - loss: 0.2948 - acc: 0.8994 - val_loss: 0.7861 - val_acc: 0.7900

Epoch 67/200

45000/45000 [==============================] - 9s - loss: 0.2911 - acc: 0.9026 - val_loss: 0.7502 - val_acc: 0.7918

Epoch 68/200

45000/45000 [==============================] - 9s - loss: 0.2951 - acc: 0.9001 - val_loss: 0.7911 - val_acc: 0.7820

Epoch 69/200

45000/45000 [==============================] - 9s - loss: 0.2869 - acc: 0.9026 - val_loss: 0.8025 - val_acc: 0.8024

Epoch 70/200

45000/45000 [==============================] - 8s - loss: 0.2933 - acc: 0.9013 - val_loss: 0.7703 - val_acc: 0.7978

Epoch 71/200

45000/45000 [==============================] - 8s - loss: 0.2902 - acc: 0.9007 - val_loss: 0.7685 - val_acc: 0.7962

Epoch 72/200

45000/45000 [==============================] - 9s - loss: 0.2920 - acc: 0.9025 - val_loss: 0.7412 - val_acc: 0.7956

Epoch 73/200

45000/45000 [==============================] - 8s - loss: 0.2861 - acc: 0.9038 - val_loss: 0.7957 - val_acc: 0.8026

Epoch 74/200

45000/45000 [==============================] - 8s - loss: 0.2785 - acc: 0.9069 - val_loss: 0.7522 - val_acc: 0.8002

Epoch 75/200

45000/45000 [==============================] - 9s - loss: 0.2811 - acc: 0.9064 - val_loss: 0.8181 - val_acc: 0.7902

Epoch 76/200

45000/45000 [==============================] - 9s - loss: 0.2841 - acc: 0.9053 - val_loss: 0.7695 - val_acc: 0.7990

Epoch 77/200

45000/45000 [==============================] - 9s - loss: 0.2853 - acc: 0.9061 - val_loss: 0.7608 - val_acc: 0.7972

Epoch 78/200

45000/45000 [==============================] - 9s - loss: 0.2714 - acc: 0.9080 - val_loss: 0.7534 - val_acc: 0.8034

Epoch 79/200

45000/45000 [==============================] - 9s - loss: 0.2797 - acc: 0.9072 - val_loss: 0.7188 - val_acc: 0.7988

Epoch 80/200

45000/45000 [==============================] - 9s - loss: 0.2682 - acc: 0.9110 - val_loss: 0.7751 - val_acc: 0.7954

Epoch 81/200

45000/45000 [==============================] - 9s - loss: 0.2885 - acc: 0.9038 - val_loss: 0.7711 - val_acc: 0.8010

Epoch 82/200

45000/45000 [==============================] - 9s - loss: 0.2705 - acc: 0.9094 - val_loss: 0.7613 - val_acc: 0.8000

Epoch 83/200

45000/45000 [==============================] - 9s - loss: 0.2738 - acc: 0.9095 - val_loss: 0.8300 - val_acc: 0.7944

Epoch 84/200

45000/45000 [==============================] - 9s - loss: 0.2795 - acc: 0.9066 - val_loss: 0.8001 - val_acc: 0.7912

Epoch 85/200

45000/45000 [==============================] - 9s - loss: 0.2721 - acc: 0.9086 - val_loss: 0.7862 - val_acc: 0.8092

Epoch 86/200

45000/45000 [==============================] - 9s - loss: 0.2752 - acc: 0.9087 - val_loss: 0.7331 - val_acc: 0.7942

Epoch 87/200

45000/45000 [==============================] - 9s - loss: 0.2725 - acc: 0.9089 - val_loss: 0.7999 - val_acc: 0.7914

Epoch 88/200

45000/45000 [==============================] - 9s - loss: 0.2644 - acc: 0.9108 - val_loss: 0.7944 - val_acc: 0.7990

Epoch 89/200

45000/45000 [==============================] - 9s - loss: 0.2725 - acc: 0.9106 - val_loss: 0.7622 - val_acc: 0.8006

Epoch 90/200

45000/45000 [==============================] - 9s - loss: 0.2622 - acc: 0.9129 - val_loss: 0.8172 - val_acc: 0.7988

Epoch 91/200

45000/45000 [==============================] - 9s - loss: 0.2772 - acc: 0.9085 - val_loss: 0.8243 - val_acc: 0.8004

Epoch 92/200

45000/45000 [==============================] - 9s - loss: 0.2609 - acc: 0.9136 - val_loss: 0.7723 - val_acc: 0.7992

Epoch 93/200

45000/45000 [==============================] - 9s - loss: 0.2666 - acc: 0.9129 - val_loss: 0.8366 - val_acc: 0.7932

Epoch 94/200

45000/45000 [==============================] - 9s - loss: 0.2593 - acc: 0.9135 - val_loss: 0.8666 - val_acc: 0.7956

Epoch 95/200

45000/45000 [==============================] - 9s - loss: 0.2692 - acc: 0.9100 - val_loss: 0.8901 - val_acc: 0.7954

Epoch 96/200

45000/45000 [==============================] - 8s - loss: 0.2569 - acc: 0.9160 - val_loss: 0.8515 - val_acc: 0.8006

Epoch 97/200

45000/45000 [==============================] - 8s - loss: 0.2636 - acc: 0.9146 - val_loss: 0.8639 - val_acc: 0.7960

Epoch 98/200

45000/45000 [==============================] - 9s - loss: 0.2693 - acc: 0.9113 - val_loss: 0.7891 - val_acc: 0.7916

Epoch 99/200

45000/45000 [==============================] - 9s - loss: 0.2611 - acc: 0.9144 - val_loss: 0.8650 - val_acc: 0.7928

Epoch 100/200

45000/45000 [==============================] - 9s - loss: 0.2589 - acc: 0.9121 - val_loss: 0.8683 - val_acc: 0.7990

Epoch 101/200

45000/45000 [==============================] - 9s - loss: 0.2601 - acc: 0.9142 - val_loss: 0.9116 - val_acc: 0.8030

Epoch 102/200

45000/45000 [==============================] - 9s - loss: 0.2616 - acc: 0.9138 - val_loss: 0.8229 - val_acc: 0.7928

Epoch 103/200

45000/45000 [==============================] - 9s - loss: 0.2603 - acc: 0.9140 - val_loss: 0.8847 - val_acc: 0.7994

Epoch 104/200

45000/45000 [==============================] - 9s - loss: 0.2579 - acc: 0.9150 - val_loss: 0.9079 - val_acc: 0.8004

Epoch 105/200

45000/45000 [==============================] - 8s - loss: 0.2696 - acc: 0.9127 - val_loss: 0.7450 - val_acc: 0.8002

Epoch 106/200

45000/45000 [==============================] - 9s - loss: 0.2555 - acc: 0.9161 - val_loss: 0.8186 - val_acc: 0.7992

Epoch 107/200

45000/45000 [==============================] - 9s - loss: 0.2631 - acc: 0.9160 - val_loss: 0.8686 - val_acc: 0.7920

Epoch 108/200

45000/45000 [==============================] - 9s - loss: 0.2524 - acc: 0.9178 - val_loss: 0.9136 - val_acc: 0.7956

Epoch 109/200

45000/45000 [==============================] - 9s - loss: 0.2569 - acc: 0.9151 - val_loss: 0.8148 - val_acc: 0.7994

Epoch 110/200

45000/45000 [==============================] - 9s - loss: 0.2586 - acc: 0.9150 - val_loss: 0.8826 - val_acc: 0.7984

Epoch 111/200

45000/45000 [==============================] - 9s - loss: 0.2520 - acc: 0.9155 - val_loss: 0.8621 - val_acc: 0.7980

Epoch 112/200

45000/45000 [==============================] - 9s - loss: 0.2586 - acc: 0.9157 - val_loss: 0.8149 - val_acc: 0.8038

Epoch 113/200

45000/45000 [==============================] - 9s - loss: 0.2623 - acc: 0.9151 - val_loss: 0.8361 - val_acc: 0.7972

Epoch 114/200

45000/45000 [==============================] - 9s - loss: 0.2535 - acc: 0.9177 - val_loss: 0.8618 - val_acc: 0.7970

Epoch 115/200

45000/45000 [==============================] - 8s - loss: 0.2570 - acc: 0.9164 - val_loss: 0.7687 - val_acc: 0.8044

Epoch 116/200

45000/45000 [==============================] - 9s - loss: 0.2501 - acc: 0.9183 - val_loss: 0.8270 - val_acc: 0.7934

Epoch 117/200

45000/45000 [==============================] - 8s - loss: 0.2535 - acc: 0.9182 - val_loss: 0.7861 - val_acc: 0.7986

Epoch 118/200

45000/45000 [==============================] - 9s - loss: 0.2507 - acc: 0.9184 - val_loss: 0.8203 - val_acc: 0.7996

Epoch 119/200

45000/45000 [==============================] - 9s - loss: 0.2530 - acc: 0.9173 - val_loss: 0.8294 - val_acc: 0.7904

Epoch 120/200

45000/45000 [==============================] - 9s - loss: 0.2599 - acc: 0.9160 - val_loss: 0.8458 - val_acc: 0.7902

Epoch 121/200

45000/45000 [==============================] - 9s - loss: 0.2483 - acc: 0.9164 - val_loss: 0.7573 - val_acc: 0.7976

Epoch 122/200

45000/45000 [==============================] - 8s - loss: 0.2492 - acc: 0.9190 - val_loss: 0.8435 - val_acc: 0.8012

Epoch 123/200

45000/45000 [==============================] - 9s - loss: 0.2528 - acc: 0.9179 - val_loss: 0.8594 - val_acc: 0.7964

Epoch 124/200

45000/45000 [==============================] - 9s - loss: 0.2581 - acc: 0.9173 - val_loss: 0.9037 - val_acc: 0.7944

Epoch 125/200

45000/45000 [==============================] - 8s - loss: 0.2404 - acc: 0.9212 - val_loss: 0.7893 - val_acc: 0.7976

Epoch 126/200

45000/45000 [==============================] - 8s - loss: 0.2492 - acc: 0.9177 - val_loss: 0.8679 - val_acc: 0.7982

Epoch 127/200

45000/45000 [==============================] - 8s - loss: 0.2483 - acc: 0.9196 - val_loss: 0.8894 - val_acc: 0.7956

Epoch 128/200

45000/45000 [==============================] - 9s - loss: 0.2539 - acc: 0.9176 - val_loss: 0.8413 - val_acc: 0.8006

Epoch 129/200

45000/45000 [==============================] - 8s - loss: 0.2477 - acc: 0.9184 - val_loss: 0.8151 - val_acc: 0.7982

Epoch 130/200

45000/45000 [==============================] - 9s - loss: 0.2586 - acc: 0.9188 - val_loss: 0.8173 - val_acc: 0.7954

Epoch 131/200

45000/45000 [==============================] - 9s - loss: 0.2498 - acc: 0.9189 - val_loss: 0.8539 - val_acc: 0.7996

Epoch 132/200

45000/45000 [==============================] - 9s - loss: 0.2426 - acc: 0.9190 - val_loss: 0.8543 - val_acc: 0.7952

Epoch 133/200

45000/45000 [==============================] - 9s - loss: 0.2460 - acc: 0.9185 - val_loss: 0.8665 - val_acc: 0.8008

Epoch 134/200

45000/45000 [==============================] - 9s - loss: 0.2436 - acc: 0.9216 - val_loss: 0.8933 - val_acc: 0.7950

Epoch 135/200

45000/45000 [==============================] - 8s - loss: 0.2468 - acc: 0.9203 - val_loss: 0.8270 - val_acc: 0.7940

Epoch 136/200

45000/45000 [==============================] - 9s - loss: 0.2479 - acc: 0.9194 - val_loss: 0.8365 - val_acc: 0.8052

Epoch 137/200

45000/45000 [==============================] - 9s - loss: 0.2449 - acc: 0.9206 - val_loss: 0.7964 - val_acc: 0.8018

Epoch 138/200

45000/45000 [==============================] - 9s - loss: 0.2440 - acc: 0.9220 - val_loss: 0.8784 - val_acc: 0.7914

Epoch 139/200

45000/45000 [==============================] - 9s - loss: 0.2485 - acc: 0.9198 - val_loss: 0.8259 - val_acc: 0.7852

Epoch 140/200

45000/45000 [==============================] - 9s - loss: 0.2482 - acc: 0.9204 - val_loss: 0.8954 - val_acc: 0.7960

Epoch 141/200

45000/45000 [==============================] - 9s - loss: 0.2344 - acc: 0.9249 - val_loss: 0.8708 - val_acc: 0.7874

Epoch 142/200

45000/45000 [==============================] - 9s - loss: 0.2476 - acc: 0.9204 - val_loss: 0.9190 - val_acc: 0.7954

Epoch 143/200

45000/45000 [==============================] - 9s - loss: 0.2415 - acc: 0.9223 - val_loss: 0.9607 - val_acc: 0.7960

Epoch 144/200

45000/45000 [==============================] - 9s - loss: 0.2377 - acc: 0.9232 - val_loss: 0.8987 - val_acc: 0.7970

Epoch 145/200

45000/45000 [==============================] - 9s - loss: 0.2481 - acc: 0.9201 - val_loss: 0.8611 - val_acc: 0.8048

Epoch 146/200

45000/45000 [==============================] - 9s - loss: 0.2504 - acc: 0.9197 - val_loss: 0.8411 - val_acc: 0.7938

Epoch 147/200

45000/45000 [==============================] - 9s - loss: 0.2450 - acc: 0.9216 - val_loss: 0.7839 - val_acc: 0.8028

Epoch 148/200

45000/45000 [==============================] - 9s - loss: 0.2327 - acc: 0.9250 - val_loss: 0.8910 - val_acc: 0.8054

Epoch 149/200

45000/45000 [==============================] - 9s - loss: 0.2432 - acc: 0.9219 - val_loss: 0.8568 - val_acc: 0.8000

Epoch 150/200

45000/45000 [==============================] - 9s - loss: 0.2436 - acc: 0.9236 - val_loss: 0.9061 - val_acc: 0.7938

Epoch 151/200

45000/45000 [==============================] - 9s - loss: 0.2434 - acc: 0.9222 - val_loss: 0.8439 - val_acc: 0.7986

Epoch 152/200

45000/45000 [==============================] - 9s - loss: 0.2439 - acc: 0.9225 - val_loss: 0.9002 - val_acc: 0.7994

Epoch 153/200

45000/45000 [==============================] - 8s - loss: 0.2373 - acc: 0.9237 - val_loss: 0.8756 - val_acc: 0.7880

Epoch 154/200

45000/45000 [==============================] - 8s - loss: 0.2359 - acc: 0.9238 - val_loss: 0.8514 - val_acc: 0.7936

Epoch 155/200

45000/45000 [==============================] - 9s - loss: 0.2435 - acc: 0.9222 - val_loss: 0.8377 - val_acc: 0.8080

Epoch 156/200

45000/45000 [==============================] - 9s - loss: 0.2478 - acc: 0.9204 - val_loss: 0.8831 - val_acc: 0.7992

Epoch 157/200

45000/45000 [==============================] - 9s - loss: 0.2337 - acc: 0.9253 - val_loss: 0.8453 - val_acc: 0.7994

Epoch 158/200

45000/45000 [==============================] - 9s - loss: 0.2336 - acc: 0.9257 - val_loss: 0.9027 - val_acc: 0.7882

Epoch 159/200

45000/45000 [==============================] - 9s - loss: 0.2384 - acc: 0.9230 - val_loss: 0.9121 - val_acc: 0.8016

Epoch 160/200

45000/45000 [==============================] - 9s - loss: 0.2481 - acc: 0.9217 - val_loss: 0.9495 - val_acc: 0.7974

Epoch 161/200

45000/45000 [==============================] - 9s - loss: 0.2450 - acc: 0.9224 - val_loss: 0.8510 - val_acc: 0.7884

Epoch 162/200

45000/45000 [==============================] - 9s - loss: 0.2433 - acc: 0.9220 - val_loss: 0.8979 - val_acc: 0.7948

Epoch 163/200

45000/45000 [==============================] - 9s - loss: 0.2339 - acc: 0.9262 - val_loss: 0.8979 - val_acc: 0.7978

Epoch 164/200

45000/45000 [==============================] - 9s - loss: 0.2298 - acc: 0.9257 - val_loss: 0.9036 - val_acc: 0.7990

Epoch 165/200

45000/45000 [==============================] - 9s - loss: 0.2404 - acc: 0.9236 - val_loss: 0.8341 - val_acc: 0.8052

Epoch 166/200

45000/45000 [==============================] - 9s - loss: 0.2402 - acc: 0.9227 - val_loss: 0.8731 - val_acc: 0.7996

Epoch 167/200

45000/45000 [==============================] - 9s - loss: 0.2367 - acc: 0.9250 - val_loss: 0.9218 - val_acc: 0.7992

Epoch 168/200

45000/45000 [==============================] - 9s - loss: 0.2267 - acc: 0.9262 - val_loss: 0.8767 - val_acc: 0.7922

Epoch 169/200

45000/45000 [==============================] - 9s - loss: 0.2336 - acc: 0.9254 - val_loss: 0.8418 - val_acc: 0.8038

Epoch 170/200

45000/45000 [==============================] - 9s - loss: 0.2434 - acc: 0.9232 - val_loss: 0.8362 - val_acc: 0.7920

Epoch 171/200

45000/45000 [==============================] - 9s - loss: 0.2328 - acc: 0.9265 - val_loss: 0.8712 - val_acc: 0.7950

Epoch 172/200

45000/45000 [==============================] - 9s - loss: 0.2346 - acc: 0.9262 - val_loss: 0.9256 - val_acc: 0.7976

Epoch 173/200

45000/45000 [==============================] - 8s - loss: 0.2382 - acc: 0.9242 - val_loss: 0.8875 - val_acc: 0.7982

Epoch 174/200

45000/45000 [==============================] - 9s - loss: 0.2400 - acc: 0.9239 - val_loss: 0.8264 - val_acc: 0.7864

Epoch 175/200

45000/45000 [==============================] - 9s - loss: 0.2334 - acc: 0.9261 - val_loss: 0.9178 - val_acc: 0.8014

Epoch 176/200

45000/45000 [==============================] - 9s - loss: 0.2427 - acc: 0.9219 - val_loss: 0.8458 - val_acc: 0.7920

Epoch 177/200

45000/45000 [==============================] - 9s - loss: 0.2310 - acc: 0.9257 - val_loss: 0.9171 - val_acc: 0.8062

Epoch 178/200

45000/45000 [==============================] - 9s - loss: 0.2310 - acc: 0.9265 - val_loss: 0.8544 - val_acc: 0.7990

Epoch 179/200

45000/45000 [==============================] - 9s - loss: 0.2378 - acc: 0.9240 - val_loss: 0.9259 - val_acc: 0.8000

Epoch 180/200

45000/45000 [==============================] - 9s - loss: 0.2381 - acc: 0.9242 - val_loss: 0.8573 - val_acc: 0.8056

Epoch 181/200

45000/45000 [==============================] - 9s - loss: 0.2231 - acc: 0.9297 - val_loss: 0.8935 - val_acc: 0.8002

Epoch 182/200

45000/45000 [==============================] - 9s - loss: 0.2419 - acc: 0.9248 - val_loss: 1.0145 - val_acc: 0.7900

Epoch 183/200

45000/45000 [==============================] - 9s - loss: 0.2336 - acc: 0.9266 - val_loss: 0.8838 - val_acc: 0.8006

Epoch 184/200

45000/45000 [==============================] - 9s - loss: 0.2429 - acc: 0.9242 - val_loss: 0.8685 - val_acc: 0.7918

Epoch 185/200

45000/45000 [==============================] - 9s - loss: 0.2317 - acc: 0.9260 - val_loss: 0.8297 - val_acc: 0.7942

Epoch 186/200

45000/45000 [==============================] - 9s - loss: 0.2330 - acc: 0.9264 - val_loss: 0.8831 - val_acc: 0.8026

Epoch 187/200

45000/45000 [==============================] - 9s - loss: 0.2353 - acc: 0.9254 - val_loss: 0.8934 - val_acc: 0.7956

Epoch 188/200

45000/45000 [==============================] - 9s - loss: 0.2312 - acc: 0.9247 - val_loss: 0.9275 - val_acc: 0.8042

Epoch 189/200

45000/45000 [==============================] - 9s - loss: 0.2239 - acc: 0.9282 - val_loss: 0.9246 - val_acc: 0.7934

Epoch 190/200

45000/45000 [==============================] - 9s - loss: 0.2349 - acc: 0.9253 - val_loss: 0.8628 - val_acc: 0.8000

Epoch 191/200

45000/45000 [==============================] - 9s - loss: 0.2313 - acc: 0.9266 - val_loss: 0.9020 - val_acc: 0.7978

Epoch 192/200

45000/45000 [==============================] - 9s - loss: 0.2358 - acc: 0.9254 - val_loss: 0.9481 - val_acc: 0.7966

Epoch 193/200

45000/45000 [==============================] - 9s - loss: 0.2298 - acc: 0.9276 - val_loss: 0.8791 - val_acc: 0.8010

Epoch 194/200

45000/45000 [==============================] - 9s - loss: 0.2279 - acc: 0.9265 - val_loss: 0.8890 - val_acc: 0.7976

Epoch 195/200

45000/45000 [==============================] - 9s - loss: 0.2330 - acc: 0.9273 - val_loss: 0.8893 - val_acc: 0.7890

Epoch 196/200

45000/45000 [==============================] - 9s - loss: 0.2416 - acc: 0.9243 - val_loss: 0.9002 - val_acc: 0.7922

Epoch 197/200

45000/45000 [==============================] - 9s - loss: 0.2309 - acc: 0.9273 - val_loss: 0.9232 - val_acc: 0.7990

Epoch 198/200

45000/45000 [==============================] - 9s - loss: 0.2247 - acc: 0.9278 - val_loss: 0.9474 - val_acc: 0.7980

Epoch 199/200

45000/45000 [==============================] - 9s - loss: 0.2335 - acc: 0.9256 - val_loss: 0.9177 - val_acc: 0.8000

Epoch 200/200

45000/45000 [==============================] - 9s - loss: 0.2378 - acc: 0.9254 - val_loss: 0.9205 - val_acc: 0.7966

9984/10000 [============================>.] - ETA: 0s

[0.97292723369598388, 0.7853]

This model achieves an accuracy of roughly 78.5% on the test set. For such a difficult task (*where human performance is only around 94%*), and given the relative simplicity of this model, this is a respectable result. However, at the time of writing, more sophisticated models had reached accuracies as high as 96.53%.

I appreciate that tinkering with this model might be cumbersome if you do not have a GPU at your disposal. I would, however, encourage you to apply a similar model to the previously discussed MNIST dataset; you should be able to break 99.3% accuracy on its test set with little to no effort using a CNN with dropout.

Throughout this post we have covered the essentials of convolutional neural networks, introduced the problem of overfitting, made a brief dent in how it can be rectified via regularisation (by applying dropout), and implemented a CNN with four convolutional layers in Keras, applying it to CIFAR-10, all in under 50 lines of code.

Next time around, we will focus on assorted topics, tips and tricks that should help you fine-tune models at this scale and extract more power from them while keeping overfitting in check.

ABOUT THE AUTHOR

Petar Veličković

Petar is currently a Research Assistant in Computational Biology within the Artificial Intelligence Group of the Cambridge University Computer Laboratory, where he is working on developing machine learning algorithms on complex networks, and their applications to bioinformatics. He is also a PhD student within the group, supervised by Dr Pietro Liò and affiliated with Trinity College. He holds a BA degree in Computer Science from the University of Cambridge, having completed the Computer Science Tripos in 2015.

Just show me the code!

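```python
from keras.datasets import cifar10 # subroutines for fetching the CIFAR-10 dataset
from keras.models import Model # basic class for specifying and training a neural network
from keras.layers import Input, Convolution2D, MaxPooling2D, Dense, Dropout, Flatten
from keras.utils import np_utils # utilities for one-hot encoding of ground truth values
import numpy as np

batch_size = 32 # in each iteration, we consider 32 training examples at once
num_epochs = 200 # we iterate 200 times over the entire training set
kernel_size = 3 # we will use 3x3 kernels throughout
pool_size = 2 # we will use 2x2 pooling throughout
conv_depth_1 = 32 # 32 kernels per conv. layer before the first pooling layer...
conv_depth_2 = 64 # ...switching to 64 kernels after it
drop_prob_1 = 0.25 # dropout after pooling with probability 0.25
drop_prob_2 = 0.5 # dropout in the FC layer with probability 0.5
hidden_size = 512 # the FC layer will have 512 neurons

(X_train, y_train), (X_test, y_test) = cifar10.load_data() # fetch CIFAR-10 data

# Assumes channels-first ordering: (samples, depth, height, width)
num_train, depth, height, width = X_train.shape
num_test = X_test.shape[0]
num_classes = np.unique(y_train).shape[0] # there are 10 image classes

X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
# Normalise both sets to [0, 1] with the same constant. It must be computed
# before X_train is rescaled; afterwards np.max(X_train) would already be 1.0
# and X_test would be left unnormalised.
max_pixel = np.max(X_train)
X_train /= max_pixel
X_test /= max_pixel

Y_train = np_utils.to_categorical(y_train, num_classes) # one-hot encode the labels
Y_test = np_utils.to_categorical(y_test, num_classes)

inp = Input(shape=(depth, height, width)) # channels-first input shape
# Conv [32] -> Conv [32] -> Pool (with dropout on the pooling layer)
conv_1 = Convolution2D(conv_depth_1, kernel_size, kernel_size, border_mode='same', activation='relu')(inp)
conv_2 = Convolution2D(conv_depth_1, kernel_size, kernel_size, border_mode='same', activation='relu')(conv_1)
pool_1 = MaxPooling2D(pool_size=(pool_size, pool_size))(conv_2)
drop_1 = Dropout(drop_prob_1)(pool_1)
# Conv [64] -> Conv [64] -> Pool (with dropout on the pooling layer)
conv_3 = Convolution2D(conv_depth_2, kernel_size, kernel_size, border_mode='same', activation='relu')(drop_1)
conv_4 = Convolution2D(conv_depth_2, kernel_size, kernel_size, border_mode='same', activation='relu')(conv_3)
pool_2 = MaxPooling2D(pool_size=(pool_size, pool_size))(conv_4)
drop_2 = Dropout(drop_prob_1)(pool_2)
# Flatten to 1D, then FC -> ReLU (with dropout) -> softmax
flat = Flatten()(drop_2)
hidden = Dense(hidden_size, activation='relu')(flat)
drop_3 = Dropout(drop_prob_2)(hidden)
out = Dense(num_classes, activation='softmax')(drop_3)

model = Model(input=inp, output=out) # a model is defined by its input and output layers

model.compile(loss='categorical_crossentropy', # cross-entropy loss
              optimizer='adam', # Adam optimiser
              metrics=['accuracy']) # report accuracy during training

model.fit(X_train, Y_train, # train on the training set...
          batch_size=batch_size, nb_epoch=num_epochs,
          verbose=1, validation_split=0.1) # ...holding out 10% of it for validation
model.evaluate(X_test, Y_test, verbose=1) # evaluate the trained model on the test set
```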
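The listing above unpacks `X_train.shape` as `(num_train, depth, height, width)`, which assumes channels-first image dimension ordering. If your Keras configuration uses channels-last ordering instead, the arrays arrive as `(num_samples, height, width, depth)` and that unpacking silently produces the wrong sizes. A minimal NumPy sketch of converting between the two layouts, using random stand-in data (the array names here are hypothetical, not part of the original code):

```python
import numpy as np

# Hypothetical stand-in for a channels-last batch: 5 RGB images of 32x32 pixels,
# laid out as (num_samples, height, width, depth).
x_channels_last = np.random.rand(5, 32, 32, 3).astype('float32')

# Move the channel axis to position 1, giving (num_samples, depth, height, width),
# which is the layout the listing above expects.
x_channels_first = np.transpose(x_channels_last, (0, 3, 1, 2))

num_train, depth, height, width = x_channels_first.shape
print(num_train, depth, height, width)  # 5 3 32 32

# Pixel values are only rearranged, never changed.
assert x_channels_first[2, 1, 10, 20] == x_channels_last[2, 10, 20, 1]
```

Transposing rather than reshaping matters here: `reshape` would keep the memory order and scramble pixels across channels, whereas `transpose` moves whole axes.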

Deep learning for complete beginners: neural network fine-tuning techniques

by Cambridge Coding Academy

Welcome to the third (and final) post in a series designed to get you quickly up to speed with deep learning, from first principles all the way to discussions of some of the intricate details, with the purpose of achieving respectable performance on two established machine learning benchmarks: MNIST (classification of handwritten digits) and CIFAR-10 (classification of small images across 10 distinct classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship and truck).

Last time around, I introduced the convolutional neural network model and illustrated how, combined with dropout (a simple but effective regularisation method), it can quickly achieve an accuracy of about 78.5% on CIFAR-10 using the Keras deep learning framework.

By now, you have acquired the fundamental skills necessary to apply deep learning to most problems of interest (a notable exception, outside the scope of these tutorials, is processing time series of arbitrary length, for which a recurrent neural network (RNN) model is often preferable). In this tutorial, I will wrap up with an important but often overlooked aspect of tutorials such as this one: the tips and tricks for properly fine-tuning a model, so that it generalises better than the initial baseline you started out with.

This tutorial will, for the most part, assume familiarity with the previous two in the series.

Typically, the design process for neural networks starts with a simple network, either directly applying architectures that have been successful on similar problems, or trying out hyperparameter values that generally seem effective. Once this reaches a performance level that makes a reasonable baseline, we may look into modifying every fixed detail in order to extract the maximum performance from the network. This is commonly known as hyperparameter tuning, because it involves modifying the components of the network that need to be specified before training.

Although the methods described here can yield far more tangible improvements on CIFAR-10, rapid prototyping on it is difficult without a GPU, so we will focus specifically on improving performance on the MNIST benchmark. Of course, I do invite you to have a go at applying methods like these to CIFAR-10 and see the kinds of gains you may achieve compared to the basic CNN approach, should your resources allow for it.
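One simple, if expensive, way to organise hyperparameter tuning is an exhaustive grid search: train and validate one model per combination of candidate values and keep the best. The sketch below is an illustration only. `train_and_validate` is a hypothetical placeholder for building, fitting and validating a model such as the baseline CNN used in this tutorial; here it returns a made-up score so the snippet runs on its own, and the candidate values are arbitrary.

```python
import itertools

# Candidate values for two hyperparameters of the baseline CNN (illustrative only).
grid = {
    'drop_prob_1': [0.1, 0.25, 0.4],
    'hidden_size': [64, 128, 256],
}

def train_and_validate(drop_prob_1, hidden_size):
    """Hypothetical placeholder: in practice this would build the model with these
    hyperparameters, call model.fit(..., validation_split=0.1) and return the
    final validation accuracy. Here it returns a made-up score instead."""
    return 0.99 - abs(drop_prob_1 - 0.25) - abs(hidden_size - 128) / 10000.0

best_score, best_params = None, None
names = sorted(grid)
# itertools.product enumerates every combination of candidate values.
for values in itertools.product(*(grid[name] for name in names)):
    params = dict(zip(names, values))
    score = train_and_validate(**params)
    if best_score is None or score > best_score:
        best_score, best_params = score, params

print(best_params)
```

Note that the selection is made on validation accuracy; the test set should be touched only once, after all tuning decisions are final, or the reported test accuracy becomes optimistically biased.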

We will start off with the baseline CNN given below. If you find any aspects of this code unclear, I invite you to familiarise yourself with the previous two tutorials in the series—all the relevant concepts have already been introduced there.

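```python
from keras.datasets import mnist # subroutines for fetching the MNIST dataset
from keras.models import Model # basic class for specifying and training a neural network
from keras.layers import Input, Dense, Flatten, Convolution2D, MaxPooling2D, Dropout
from keras.utils import np_utils # utilities for one-hot encoding of ground truth values

batch_size = 128 # in each iteration, we consider 128 training examples at once
num_epochs = 12 # we iterate twelve times over the entire training set
kernel_size = 3 # we will use 3x3 kernels throughout
pool_size = 2 # we will use 2x2 pooling throughout
conv_depth = 32 # 32 kernels in both convolutional layers
drop_prob_1 = 0.25 # dropout after pooling with probability 0.25
drop_prob_2 = 0.5 # dropout in the FC layer with probability 0.5
hidden_size = 128 # the FC layer will have 128 neurons
num_train = 60000 # there are 60000 training examples in MNIST
num_test = 10000 # there are 10000 test examples in MNIST
height, width, depth = 28, 28, 1 # MNIST images are 28x28 and greyscale
num_classes = 10 # there are 10 classes (1 per digit)

(X_train, y_train), (X_test, y_test) = mnist.load_data() # fetch MNIST data

# Add an explicit channel axis, channels-first: (samples, depth, height, width)
X_train = X_train.reshape(X_train.shape[0], depth, height, width)
X_test = X_test.reshape(X_test.shape[0], depth, height, width)
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
X_train /= 255 # normalise pixel intensities to the [0, 1] range
X_test /= 255

Y_train = np_utils.to_categorical(y_train, num_classes) # one-hot encode the labels
Y_test = np_utils.to_categorical(y_test, num_classes)

inp = Input(shape=(depth, height, width)) # channels-first input shape
# Conv [32] -> Conv [32] -> Pool (with dropout on the pooling layer)
conv_1 = Convolution2D(conv_depth, kernel_size, kernel_size, border_mode='same', activation='relu')(inp)
conv_2 = Convolution2D(conv_depth, kernel_size, kernel_size, border_mode='same', activation='relu')(conv_1)
pool_1 = MaxPooling2D(pool_size=(pool_size, pool_size))(conv_2)
drop_1 = Dropout(drop_prob_1)(pool_1)
# Flatten to 1D, then FC -> ReLU (with dropout) -> softmax
flat = Flatten()(drop_1)
hidden = Dense(hidden_size, activation='relu')(flat)
drop = Dropout(drop_prob_2)(hidden)
out = Dense(num_classes, activation='softmax')(drop)

model = Model(input=inp, output=out) # a model is defined by its input and output layers

model.compile(loss='categorical_crossentropy', # cross-entropy loss
              optimizer='adam', # Adam optimiser
              metrics=['accuracy']) # report accuracy during training

model.fit(X_train, Y_train, # train on the training set...
          batch_size=batch_size, nb_epoch=num_epochs,
          verbose=1, validation_split=0.1) # ...holding out 10% of it for validation
model.evaluate(X_test, Y_test, verbose=1) # evaluate the trained model on the test set
```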

Train on 54000 samples, validate on 6000 samples

Epoch 1/12

54000/54000 [==============================] - 4s - loss: 0.3010 - acc: 0.9073 - val_loss: 0.0612 - val_acc: 0.9825

Epoch 2/12

54000/54000 [==============================] - 4s - loss: 0.1010 - acc: 0.9698 - val_loss: 0.0400 - val_acc: 0.9893

Epoch 3/12

54000/54000 [==============================] - 4s - loss: 0.0753 - acc: 0.9775 - val_loss: 0.0376 - val_acc: 0.9903

Epoch 4/12

54000/54000 [==============================] - 4s - loss: 0.0629 - acc: 0.9809 - val_loss: 0.0321 - val_acc: 0.9913

Epoch 5/12

54000/54000 [==============================] - 4s - loss: 0.0520 - acc: 0.9837 - val_loss: 0.0346 - val_acc: 0.9902

Epoch 6/12

54000/54000 [==============================] - 4s - loss: 0.0466 - acc: 0.9850 - val_loss: 0.0361 - val_acc: 0.9912

Epoch 7/12

54000/54000 [==============================] - 4s - loss: 0.0405 - acc: 0.9871 - val_loss: 0.0330 - val_acc: 0.9917

Epoch 8/12

54000/54000 [==============================] - 4s - loss: 0.0386 - acc: 0.9879 - val_loss: 0.0326 - val_acc: 0.9908

Epoch 9/12

54000/54000 [==============================] - 4s - loss: 0.0349 - acc: 0.9894 - val_loss: 0.0369 - val_acc: 0.9908

Epoch 10/12

54000/54000 [==============================] - 4s - loss: 0.0315 - acc: 0.9901 - val_loss: 0.0277 - val_acc: 0.9923

Epoch 11/12

54000/54000 [==============================] - 4s - loss: 0.0287 - acc: 0.9906 - val_loss: 0.0346 - val_acc: 0.9922

Epoch 12/12

54000/54000 [==============================] - 4s - loss: 0.0273 - acc: 0.9909 - val_loss: 0.0264 - val_acc: 0.9930

9888/10000 [============================>.] - ETA: 0s

[0.026324689089493085, 0.99119999999999997]

As can be seen, our model achieves an accuracy of 99.12% on the test set. This is slightly better than the MLP model explored in the first tutorial, but it should be easy to do even better!
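`model.evaluate` returns the test loss followed by the compiled metrics, here accuracy; Keras exposes the matching labels as `model.metrics_names`. To make the headline figure more concrete, the sketch below hard-codes the two values printed above and turns the accuracy into a count of misclassified test digits. The name list `['loss', 'acc']` is an assumption standing in for `model.metrics_names`, so the snippet runs without a trained model.

```python
# Values copied from the model.evaluate output above: [test loss, test accuracy].
scores = [0.026324689089493085, 0.99119999999999997]
# Assumed to match model.metrics_names for a model compiled with metrics=['accuracy'].
metric_names = ['loss', 'acc']
results = dict(zip(metric_names, scores))

num_test = 10000  # size of the MNIST test set
misclassified = round(num_test * (1 - results['acc']))
print('{:.2%} accuracy, {} of {} test digits misclassified'.format(
    results['acc'], misclassified, num_test))
```

Framed this way, each tenth of a percentage point at this level corresponds to ten test digits, which helps put the gains from later fine-tuning into perspective.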

The core of this tutorial will explore common ways in which a baseline neural network such as this one can often be improved (we will keep the basic CNN architecture fixed), after which we will evaluate the relative gains we have achieved.

$L_2$ regularisation

As explained in detail in the previous tutorial, one of the primary pitfalls of machine learning is overfitting, where the model sacrifices generalisation performance (sometimes catastrophically) in order to minimise training loss.
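A quick way to spot overfitting is to compare training and validation loss epoch by epoch. In Keras, `model.fit` returns a `History` object whose `history` dictionary holds one list per logged quantity, with keys such as `'loss'` and `'val_loss'`. The sketch below uses a stand-in dictionary containing three (training, validation) loss pairs copied from the CIFAR-10 log of the previous tutorial (epochs 183, 190 and 200) rather than a real `History` object; the helper `generalisation_gaps` is hypothetical.

```python
# Stand-in for history.history from model.fit(...); values copied from the
# CIFAR-10 training log of the previous tutorial (epochs 183, 190 and 200).
history = {
    'loss':     [0.2336, 0.2349, 0.2378],
    'val_loss': [0.8838, 0.8628, 0.9205],
}

def generalisation_gaps(history):
    """Return validation loss minus training loss for every logged epoch."""
    return [val - train for train, val in zip(history['loss'], history['val_loss'])]

gaps = generalisation_gaps(history)
print(['%.4f' % gap for gap in gaps])
```

A validation loss several times larger than the training loss, as in these late epochs, is a typical symptom of overfitting: the network keeps fitting the training set while its performance on unseen data stagnates.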

Previously, we introduced dropout as a very simple way to keep overfitting in check.
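As a reminder of how little machinery dropout needs, here is a minimal NumPy sketch of the "inverted" variant: during training, each unit is zeroed with probability `drop_prob` and the survivors are scaled by `1 / (1 - drop_prob)`, so the expected activation is unchanged and nothing needs to be done at test time. This illustrates the technique and is not Keras's own implementation; the function name `dropout` and the fixed random seed are assumptions of the sketch.

```python
import numpy as np

def dropout(x, drop_prob, training, rng):
    """Inverted dropout: zero each entry with probability drop_prob during training
    and rescale the rest by 1 / (1 - drop_prob); return x unchanged at test time."""
    if not training or drop_prob == 0.0:
        return x
    keep_mask = rng.random_sample(x.shape) >= drop_prob  # True with probability 1 - drop_prob
    return x * keep_mask / (1.0 - drop_prob)

rng = np.random.RandomState(0)
activations = np.ones((1000, 128), dtype='float32')

train_out = dropout(activations, 0.5, training=True, rng=rng)
test_out = dropout(activations, 0.5, training=False, rng=rng)

print(np.mean(train_out == 0))  # fraction of dropped units, close to 0.5
print(np.mean(train_out))       # close to 1.0: the expected activation is preserved
```

Because a different random mask is drawn for every batch, no single unit can rely on the presence of any other, which is what discourages the co-adaptation that leads to overfitting.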